MSc Thesis VIBOT Sign Language Translator using

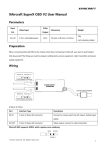
