Netica-J Reference Manual

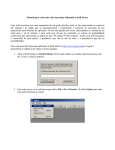
