Cypress CSC-1200T User's Guide

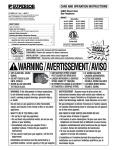
