SPSS Base 15.0 User's Guide

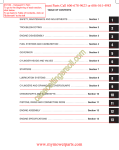
