AutoQuant 8.0.2 Suite User's Manual

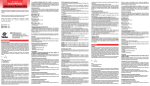
