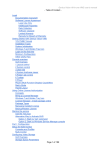

URSIM Reference Manual Abstract