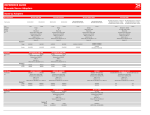

Dell PowerEdge M1000E BladeIO Guide
