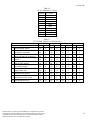

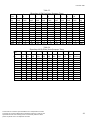

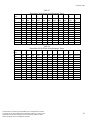

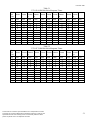

Transcript