PIM 2.2

The Parallel Iterative Methods package for

Systems of Linear Equations

User's Guide

(Fortran 77 version)

Rudnei Dias da Cunha

Mathematics Institute and National Supercomputing Centre

Universidade Federal do Rio Grande do Sul

Brasil

Tim Hopkins

Computing Laboratory

University of Kent at Canterbury

United Kingdom

Abstract

We describe PIM (Parallel Iterative Methods), a collection of Fortran 77 routines to

solve systems of linear equations on parallel computers using iterative methods.

A number of iterative methods for symmetric and nonsymmetric systems are available, including Conjugate-Gradients (CG), Bi-Conjugate-Gradients (Bi-CG), Conjugate-Gradients squared (CGS), the stabilised version of Bi-Conjugate-Gradients (Bi-CGSTAB),

the restarted stabilised version of Bi-Conjugate-Gradients (RBi-CGSTAB), generalised minimal residual (GMRES), generalised conjugate residual (GCR), normal equation solvers

(CGNR and CGNE), quasi-minimal residual (QMR), transpose-free quasi-minimal residual

(TFQMR) and Chebyshev acceleration.

The PIM routines can be used with user-supplied preconditioners, and left-, right- or

symmetric-preconditioning are supported. Several stopping criteria can be chosen by the

user.

In this user's guide we present a brief overview of the iterative methods and algorithms

available. The use of PIM is introduced via examples. We also present some results obtained

with PIM concerning the selection of stopping criteria and parallel scalability. A reference

manual can be found at the end of this report with specific details of the routines and

parameters.

Contents

1 Introduction

2 An overview of the iterative methods
    CG
    CG with eigenvalues estimation
    CGNR and CGNE
    Bi-CG
    CGS
    Bi-CGSTAB
    RBi-CGSTAB
    GMRES
    GMRES with eigenvalues estimation
    GCR
    QMR
    TFQMR
    Chebyshev acceleration

3 Internal details of PIM
    3.1 Supported architectures and environments
    3.2 Parallel programming model
    3.3 Data partitioning
    3.4 Increasing the parallel scalability of iterative methods
    3.5 Stopping criteria

4 Using PIM
    4.1 Naming convention of routines
    4.2 Obtaining PIM
    4.3 Installing PIM
        Building the PIM core functions
        Building the examples
        Cleaning-up
        Using PIM in your application
    4.4 Calling a PIM iterative method routine
    4.5 External routines
        Matrix-vector product
        Preconditioning
        Inner-products, vector norms and global accumulation
        Monitoring the iterations
    4.6 Example programs
        4.6.1 Eigenvalues estimation and Chebyshev acceleration
        4.6.2 Dense storage
        4.6.3 PDE storage
            A matrix-vector product for parallel vector architectures
        4.6.4 Preconditioners
        4.6.5 Results
            Stopping criteria
            General results
            Scalability

5 Summary

References

A Reference manual
    A.1 Description of parameters
    A.2 External routines
        Note
        Matrix-vector product v = Au
        Transpose matrix-vector product v = A^T u
        Left preconditioning v = Qu
        Right preconditioning v = Qu
        Parallel sum
        Parallel vector norm
        Monitoring routine
    A.3 PIM_CG
    A.4 PIM_CGEV
    A.5 PIM_CGNR
    A.6 PIM_CGNE
    A.7 PIM_BICG
    A.8 PIM_CGS
    A.9 PIM_BICGSTAB
    A.10 PIM_RBICGSTAB
    A.11 PIM_RGMRES
    A.12 PIM_RGMRESEV
    A.13 PIM_RGCR
    A.14 PIM_QMR
    A.15 PIM_TFQMR
    A.16 PIM_CHEBYSHEV
    A.17 PIM_SETPAR
    A.18 PIM_PRTPAR
    A.19 _INIT

1 Introduction

PIM (Parallel Iterative Methods) is a collection of Fortran 77 routines designed to solve systems of linear equations (SLEs) on parallel computers using a variety of iterative methods.

PIM offers a number of iterative methods, including

- Conjugate-Gradients (CG) [29],
- Conjugate-Gradients for normal equations with minimisation of the residual norm (CGNR) [35],
- Conjugate-Gradients for normal equations with minimisation of the error norm (CGNE) [11],
- Bi-Conjugate-Gradients (Bi-CG) [22],
- Conjugate-Gradients squared (CGS) [44],
- the stabilised version of Bi-Conjugate-Gradients (Bi-CGSTAB) [46],
- the restarted, stabilised version of Bi-Conjugate-Gradients (RBi-CGSTAB) [43],
- the restarted, generalised minimal residual (RGMRES) [42],
- the restarted, generalised conjugate residual (RGCR) [20],
- the highly-parallel algorithm for the quasi-minimal residual (QMR) method, recently proposed by Bücker and Sauren [8],
- the transpose-free quasi-minimal residual (TFQMR) [24] and
- Chebyshev acceleration [32].

The routines allow the use of preconditioners; the user may choose left-, right- or symmetric-preconditioning. Several stopping criteria are also available.

PIM was developed with two main goals:

1. To allow the user complete freedom with respect to the matrix storage, access and partitioning;

2. To achieve portability across a variety of parallel architectures and programming environments.

These goals are achieved by hiding from the PIM routines the specific details concerning the computation of the following three linear algebra operations:

1. Matrix-vector (and transpose-matrix-vector) product

2. Preconditioning step

3. Inner-products and vector norm

Routines to compute these operations need to be provided by the user. Many vendors supply their own optimised linear algebra routines, which the user may want to use.
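The separation between the solver and the three user-supplied operations can be illustrated with a minimal sketch. It is written in Python for brevity and is not PIM's interface: the function `pcg`, its argument list and the helper routines `dense_matvec`, `jacobi` and `local_dot` are all hypothetical names chosen for this illustration. The point is only that the iterative method never touches the matrix itself.

```python
def pcg(matvec, precond, dot, b, x0, tol=1e-10, maxit=100):
    """Preconditioned CG that sees the matrix only through callbacks.

    matvec(u)  -> A u            (user-supplied, any storage scheme)
    precond(r) -> Q r            (user-supplied preconditioning step)
    dot(u, v)  -> inner product  (user-supplied; global sum in parallel)
    """
    x = list(x0)
    r = [bi - qi for bi, qi in zip(b, matvec(x))]  # r = b - A x
    z = precond(r)
    p = list(z)
    rz = dot(r, z)
    for k in range(maxit):
        if dot(r, r) ** 0.5 <= tol:                # residual 2-norm test
            return x, k
        q = matvec(p)
        alpha = rz / dot(p, q)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, q)]
        z = precond(r)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, maxit


# Hypothetical user-side routines for a small dense SPD system.
A = [[4.0, 1.0], [1.0, 3.0]]

def dense_matvec(u):
    return [sum(aij * uj for aij, uj in zip(row, u)) for row in A]

def jacobi(r):
    # Diagonal (Jacobi) preconditioner: divide by the diagonal of A.
    return [ri / A[i][i] for i, ri in enumerate(r)]

def local_dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

x, iterations = pcg(dense_matvec, jacobi, local_dot, [1.0, 2.0], [0.0, 0.0])
```

Replacing `dense_matvec` by a sparse or distributed product, or `local_dot` by a routine that performs a global reduction, would leave `pcg` unchanged; this is the design choice described above.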

A number of packages for the iterative solution of linear systems are available, including ITPACK [30] and NSPCG [38]. PIM differs from these packages in three main aspects. First, while ITPACK and NSPCG may be used on a parallel vector supercomputer like a Cray Y-MP, there are no versions of these packages available for distributed-memory parallel computers. Second, there is no debugging support in PIM; this is dictated by the fact that in some multiprocessing environments parallel I/O is not available. Third, we do not provide a collection of preconditioners but leave the responsibility of providing the appropriate routines to the user.

In this sense, PIM has many similarities to a proposed standard for iterative linear solvers by Ashby and Seager [5]. In that proposal, the user supplies the matrix-vector product and preconditioning routines. We believe that their proposed standard satisfies many of the needs of the scientific community as, drawing on its concepts, we have been able to provide software that has been used in a variety of parallel and sequential environments. PIM does not always follow the proposal, especially with respect to the format of the matrix-vector product routines and the lack of debugging support.

Due to the openness of the design of PIM, it is also possible to use it on a sequential machine. In this case, the user can take advantage of the BLAS [16] to compute the above operations. This characteristic is important for testing purposes; once the user is satisfied that the selection of preconditioners and stopping criteria is suitable, the computation can be accelerated by using appropriate parallel versions of the three linear algebra operations mentioned above.

A package similar to PIM is the Simplified Linear Equation Solvers (SLES) by Gropp and Smith [31], part of the PETSc project. In SLES the user has a number of iterative methods (CG, CGS, Bi-CGSTAB, two variants of the transpose-free QMR, restarted GMRES, Chebyshev and Richardson) which can be used together with built-in preconditioners and can be executed either sequentially or in parallel. The package may be used with any data representation of the matrix and vectors, with some routines being provided to create matrices dynamically in its internal format (a feature found in ITPACK). The user can also extend SLES by providing new routines for preconditioners and iterative methods without modifying SLES. It is also possible to debug and monitor the performance of a SLES routine.

Portability of code across different multiprocessor platforms is a very important issue. For distributed-memory multiprocessor computers, a number of public-domain software libraries have appeared, including PVM [28], TCGMSG [33], NXLIB [45] and p4 [9] (the latter with support for shared-memory programming). These libraries are available on a number of architectures, making it possible to port applications between different parallel computers with few (if any) modifications to the code being necessary. In 1993 the "Message-Passing Interface Forum", a consortium of academia and vendors, drawing on the experiences of users of those and other libraries, defined a standard interface for message-passing operations, called MPI [23]. Today we have available implementations of MPI built on top of other, existing libraries, like the CHIMP/MPI library developed at the Edinburgh Parallel Computing Centre [7], and the Unify project [10] which provides an MPI interface on top of PVM. It is expected that native implementations will be available soon. In the previous releases of PIM (1.0 and 1.1) we had distributed examples using PVM, TCGMSG, p4 and NXLIB; however, from this release onwards we will support only PVM, the "de facto" standard for message-passing, and MPI.

We would like to mention two projects which we believe can be used together with PIM. The first is the proposed standard for a user-level sparse BLAS by Duff et al. [19] and Heroux [34]. This standard addresses the common problem of accessing and storing sparse matrices in the context of the BLAS routines; such routines could then be called by the user in conjunction with a PIM routine. The second is the BLACS project by Dongarra et al. [17] which provides routines to perform distributed operations over matrices using PVM 3.1.

2 An overview of the iterative methods

How to choose an iterative method from the many available is still an open question, since any

one of these methods may solve a particular system in very few iterations while diverging on

another. In this section we provide a brief overview of the iterative methods present in PIM.

More details are available in the works of Ashby et al. [4], Saad [40][41], Nachtigal et al. [37],

Freund et al. [25][26] and Barrett et al. [6].

We introduce the following notation. CG, Bi-CG, CGS, Bi-CGSTAB, restarted GMRES, restarted GCR and TFQMR solve a non-singular system of $n$ linear equations of the form

$$Q_1 A Q_2 x = Q_1 b \qquad (1)$$

where $Q_1$ and $Q_2$ are the preconditioning matrices. For CGNR, the system solved is

$$Q_1 A^T A Q_2 x = Q_1 A^T b \qquad (2)$$

and for CGNE we solve the system

$$Q_1 A A^T Q_2 x = Q_1 b \qquad (3)$$

CG. The CG method is used mainly to solve Hermitian positive-definite (HPD) systems. The method minimises the error in the $A$-norm and, in exact arithmetic, terminates in at most $n$ iterations. The method does not require the coefficient matrix explicitly; only the result of a matrix-vector product $Au$ is needed. It also requires a relatively small number of vectors to be stored per iteration since its iterates can be expressed by short, three-term vector recurrences.

With suitable preconditioners, CG can be used to solve nonsymmetric systems. Holter et al. [36] have solved a number of problems arising from the modelling of groundwater flow via finite-difference discretisations of the two-dimensional diffusion equation. The properties of the model led to systems where the coefficient matrix was very ill-conditioned; incomplete factorisations and least-squares polynomial preconditioners were used to solve these systems. Hyperbolic equations of the form

$$\frac{\partial u}{\partial t} + \sigma_1 \frac{\partial u}{\partial x} + \sigma_2 \frac{\partial u}{\partial y} = f(x, y, t)$$

have been solved with CG using a Neumann polynomial approximation to $A^{-1}$ as a preconditioner [12].

CG with eigenvalues estimation. An important characteristic of CG is its connection to the Lanczos method [29], which allows us to obtain estimates of the eigenvalues of $Q_1 A Q_2$ with only a little extra work per iteration. These estimates, $\mu_1$ and $\mu_n$, are obtained from the Lanczos tridiagonal matrix $T_k$ whose entries are generated during the iterations of the CG method [29, pp. 475-480, 523-524]. If we define the matrices $\Delta = \mathrm{diag}(\rho_0, \rho_1, \ldots, \rho_{k-1})$, $G_k = \mathrm{diag}(\gamma_0, \gamma_1, \ldots, \gamma_{k-1})$ and

$$B_k = \begin{bmatrix} 1 & -\beta_2 & & & \\ & 1 & -\beta_3 & & \\ & & 1 & \ddots & \\ & & & \ddots & -\beta_k \\ & & & & 1 \end{bmatrix}$$

where $\rho_i = \| r_i \|_2$, $r_i$ is the residual at the $i$-th iteration, $\gamma_i = p_i^T A p_i$ and $\beta_i = r_{i-1}^T r_{i-1} / r_{i-2}^T r_{i-2}$ for $i \ge 2$ are generated via the CG iterations (at no extra cost), we obtain the Lanczos matrix via the relation

$$T_k = \Delta^{-1} B_k^T G_k B_k \Delta^{-1} \qquad (4)$$

Due to the structure of the matrices $B_k$, $\Delta$ and $G_k$, the matrix $T_k$ can be easily updated during the CG iterations. The general formula for $T_k$ is

$$a_i = (\beta_i^2 \gamma_{i-2} + \gamma_{i-1}) / \rho_{i-1}^2, \quad \beta_1 = 0, \quad i = 1, 2, \ldots, k$$

$$b_i = -\gamma_{i-1} \beta_{i+1} / (\rho_{i-1} \rho_i), \quad i = 1, 2, \ldots, k-1$$

where $a_i$ and $b_i$ are the elements along the diagonal and subdiagonal of $T_k$ respectively.
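The update formulas for the entries of $T_k$ can be sketched in a few lines. The Python function below is an illustration only, not the PIM code; the name `lanczos_from_cg` and the 0-based indexing are assumptions of this sketch. Here `rho[j]` is the 2-norm of the $j$-th residual (with `rho[0]` the initial one), `gamma[j]` is $p_j^T A p_j$, and `beta[j]` is the ratio of squared norms of residuals $j$ and $j-1$, with `beta[0] = 0`, so that `beta[j]` plays the role of $\beta_{j+1}$ in the formulas.

```python
def lanczos_from_cg(rho, gamma, beta):
    """Build the diagonal and subdiagonal of the Lanczos matrix T_k
    from quantities already computed by k CG iterations (0-based lists)."""
    k = len(rho)
    diag, sub = [], []
    for j in range(k):
        # Contribution of the previous search direction (absent for j = 0).
        prev = beta[j] ** 2 * gamma[j - 1] if j > 0 else 0.0
        diag.append((prev + gamma[j]) / rho[j] ** 2)
        if j + 1 < k:
            sub.append(-beta[j + 1] * gamma[j] / (rho[j] * rho[j + 1]))
    return diag, sub


# Two CG steps on A = [[2, 1], [1, 3]], b = (1, 0), x0 = 0 (computed by hand)
# give rho = (1, 0.5), gamma = (2, 0.625) and beta = (0, 0.25).
diag, sub = lanczos_from_cg([1.0, 0.5], [2.0, 0.625], [0.0, 0.25])
```

For this small example the result is the matrix with diagonal (2, 3) and off-diagonal -1, which has the same eigenvalues as the 2 x 2 matrix used to generate the data, as expected once CG has run for $n$ steps.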

The strategy employed to obtain the eigenvalue estimates is based on Sturm sequences [29, pp. 437-439]. For the matrix $T_2$, obtained during the first iteration of CG, the eigenvalues are obtained directly from the quadratic equation derived from $p(\lambda) = \det(T_2 - \lambda I)$. We also set an interval $[c, d] = [\mu_1, \mu_n]$.

For the next iterations, we update the interval $[c, d]$ using Gerschgorin's theorem. This is easily accomplished since at each iteration only two new values are added to $T_k$ to give $T_{k+1}$; the updated interval is then

$$c = \min(c, \; |a_k| - |b_{k-1}| - |b_k|, \; |a_{k+1}| - |b_k|),$$

$$d = \max(d, \; |a_k| + |b_{k-1}| + |b_k|, \; |a_{k+1}| + |b_k|)$$

The new estimates for the extreme eigenvalues are then computed using a bisection routine applied to the polynomial $p(\lambda) = \det(T_{k+1} - \lambda I)$, which is computed via a recurrence expression [29, pp. 437]. The intervals $[c, \mu_1]$ and $[\mu_n, d]$ are used in the bisection routine to find the new estimates of $\mu_1$ and $\mu_n$ respectively.
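A minimal sketch of bisection on a symmetric tridiagonal matrix is given below, in Python. It is not the PIM routine: the function names are hypothetical, the search is started from the full Gerschgorin interval rather than from the intervals $[c, \mu_1]$ and $[\mu_n, d]$, and the Sturm sequence is evaluated in its ratio form (each `d` is the quotient of two consecutive leading principal minors of $T - xI$), which is a common variant of the recurrence for $p(\lambda)$.

```python
def count_eigenvalues_below(a, b, x):
    """Number of eigenvalues of the symmetric tridiagonal matrix with
    diagonal a and off-diagonal b that are smaller than x."""
    count = 0
    d = 1.0
    for i in range(len(a)):
        off2 = b[i - 1] ** 2 if i > 0 else 0.0
        d = (a[i] - x) - off2 / d
        if d == 0.0:
            d = -1e-30          # avoid a division by zero at the next step
        if d < 0.0:
            count += 1
    return count


def gerschgorin_interval(a, b):
    """Interval containing all eigenvalues (Gerschgorin's theorem).
    For a positive diagonal, a[i] coincides with |a[i]|."""
    n = len(a)
    lo, hi = float("inf"), float("-inf")
    for i in range(n):
        radius = (abs(b[i - 1]) if i > 0 else 0.0) \
               + (abs(b[i]) if i < n - 1 else 0.0)
        lo = min(lo, a[i] - radius)
        hi = max(hi, a[i] + radius)
    return lo, hi


def kth_eigenvalue(a, b, k, tol=1e-12):
    """k-th smallest eigenvalue (k = 1 .. n) by bisection."""
    lo, hi = gerschgorin_interval(a, b)
    for _ in range(200):        # hard limit so the loop always terminates
        if hi - lo <= tol:
            break
        mid = 0.5 * (lo + hi)
        if count_eigenvalues_below(a, b, mid) >= k:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


smallest = kth_eigenvalue([2.0, 3.0], [-1.0], 1)
largest = kth_eigenvalue([2.0, 3.0], [-1.0], 2)
```

For the 2 x 2 matrix with diagonal (2, 3) and off-diagonal -1, the two calls return approximations to $(5 - \sqrt{5})/2$ and $(5 + \sqrt{5})/2$.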

A possible use of this routine would be to employ adaptive polynomial preconditioners (see [2] and [3]) where at each iteration information about the extreme eigenvalues of $Q_1 A Q_2$ is obtained and the polynomial preconditioner is modified to represent a more accurate approximation to $A^{-1}$. This routine can also be used as a preliminary step before solving the system using the Chebyshev acceleration routine, PIM_CHEBYSHEV.

CGNR and CGNE. For nonsymmetric systems, one could use the CG formulation applied to systems involving either $A^T A$ or $A A^T$; these are called CGNR and CGNE respectively. The difference between the two methods is that CGNR minimises the residual $\| b - A x_k \|_2$ and CGNE the error $\| A^{-1} b - x_k \|_2$. A potential problem with this approach is that the condition number of $A^T A$ or $A A^T$ is the square of that of $A$, and so is large even for a moderately ill-conditioned $A$, thus requiring a substantial number of iterations for convergence. However, as noted by Nachtigal et al. [37], CGNR is better than GMRES and CGS for some systems, including circulant matrices. More generally, CGNR and CGNE perform well if the eigenvalue spectrum of $A$ has some symmetries; examples of such matrices are the real skew-symmetric and shifted skew-symmetric matrices $A = e^{i\theta}(T + \sigma I)$, $T = T^H$, $\theta$ real and $\sigma$ complex.

Bi-CG. Bi-CG is a method derived to solve non-Hermitian systems of equations, and is closely related to the Lanczos method to compute the eigenvalues of $A$. The method requires few vectors per iteration and the computation of a matrix-vector product as well as a transpose-matrix-vector product $A^T u$. The iterates of Bi-CG are generated in the Krylov subspace $K(r_0, A) = \mathrm{span}\{r_0, A r_0, A^2 r_0, \ldots\}$, where $r_0 = b - A x_0$.

A Galerkin condition $w^H r_k = 0$, $\forall\, w \in K(\tilde{r}_0, A^T)$, is imposed on the residual vector, where $\tilde{r}_0$ is an arbitrary vector satisfying $r_0^T \tilde{r}_0 \neq 0$. It is important to note that two sequences of residual vectors are generated, one involving $r_k$ and $A$ and the other $\tilde{r}_k$ and $A^T$, but the solution vector $x_k$ is updated using only the first sequence.

Bi-CG converges erratically, with large oscillations of the residual 2-norm, which usually cause a large number of iterations to be performed until convergence is achieved. Moreover, the method may break down; for example, the iterations cannot proceed when some quantities (dependent on $\tilde{r}_0$) become zero¹.

CGS. CGS is a method that tries to overcome the problems of Bi-CG. By rewriting some of the expressions used in Bi-CG, it is possible to eliminate the need for $A^T$ altogether. Sonneveld [44] also noted that it is possible to (theoretically) increase the rate of convergence of Bi-CG at no extra work per iteration. However, if Bi-CG diverges for some system, CGS diverges even faster. It is also possible that CGS diverges while Bi-CG does not for some systems.

Bi-CGSTAB. Bi-CGSTAB is a variant of Bi-CG with a similar formulation to CGS. However, steepest-descent steps are performed at each iteration and these contribute to a considerably smoother convergence behaviour than that obtained with Bi-CG and CGS. It is known that for some systems Bi-CGSTAB may nevertheless present an erratic convergence behaviour, as do Bi-CG and CGS.

RBi-CGSTAB. The restarted Bi-CGSTAB, proposed by Sleijpen and Fokkema [43], tries to overcome the stagnation of the iterations of Bi-CGSTAB which occurs with a large class of systems of linear equations. The method combines the restarted GMRES method and Bi-CG, being composed of two specific sections: a Bi-CG part where $(l + 1)$ $u$ and $r$ vectors are produced ($l$ being usually 2 or 4), followed by a minimal residual step, where the residuals are minimized. RBi-CGSTAB is mathematically equivalent to Bi-CGSTAB if $l = 1$, although numerically their iterations will usually differ. The method does not require the computation of transpose matrix-vector products as in Bi-CG, and a smaller number of vectors need to be stored per iteration than for other restarted methods like GMRES.

¹ The PIM implementation of Bi-CG, CGS and Bi-CGSTAB sets $\tilde{r}_0 = r_0$ but the user may modify the code if another choice of $\tilde{r}_0$ is desirable.

GMRES. The GMRES method is a very robust method to solve nonsymmetric systems. The method uses the Arnoldi process to compute an orthonormal basis $\{v_1, v_2, \ldots, v_k\}$ of the Krylov subspace $K(A, v_1)$. The solution of the system is taken as $x_0 + V_k y_k$ where $V_k$ is a matrix whose columns are the orthonormal vectors $v_i$, and $y_k$ is the solution of the least-squares problem $H_k y_k = \| r_0 \|_2 e_1$, where the upper Hessenberg matrix $H_k$ is generated during the Arnoldi process and $e_1 = (1, 0, 0, \ldots, 0)^T$. This least-squares problem can be solved using a QR factorisation of $H_k$.

A problem that arises in connection with GMRES is that the number of vectors of order $n$ that need to be stored grows linearly with $k$ and the number of multiplications grows quadratically. This may be avoided by using a restarted version of GMRES; this is the method implemented in PIM. Instead of generating an orthonormal basis of dimension $k$, one chooses a value $c$, $c \ll n$, and generates an approximation to the solution using an orthonormal basis of dimension $c$, thereby reducing considerably the amount of storage needed. Although the restarted GMRES does not break down [42, pp. 865], it may, depending on the system and the value of $c$, produce a stationary sequence of residuals, thus not achieving convergence. Increasing the value of $c$ usually cures this problem and may also increase the rate of convergence.

A detailed explanation of the parallel implementation of the restarted GMRES used can be

found in [14].
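The Arnoldi process on which GMRES is built can be sketched as follows. This is a sequential Python illustration using modified Gram-Schmidt, not the parallel implementation described in [14]; the function names and the dense list-of-rows matrix are assumptions of the sketch. It returns the basis vectors and the $(m+1) \times m$ upper Hessenberg matrix satisfying $A V_m = V_{m+1} H$.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, u):
    return [dot(row, u) for row in A]


def arnoldi(A, r0, m):
    """m steps of the Arnoldi process started from r0.

    Returns V (list of orthonormal basis vectors) and H, an (m+1) x m
    upper Hessenberg matrix stored as a list of rows."""
    norm_r0 = math.sqrt(dot(r0, r0))
    V = [[ri / norm_r0 for ri in r0]]
    H = [[0.0] * m for _ in range(m + 1)]
    for j in range(m):
        w = matvec(A, V[j])
        for i in range(j + 1):               # modified Gram-Schmidt
            H[i][j] = dot(w, V[i])
            w = [wk - H[i][j] * vk for wk, vk in zip(w, V[i])]
        H[j + 1][j] = math.sqrt(dot(w, w))
        if H[j + 1][j] == 0.0:               # invariant subspace found
            break
        V.append([wk / H[j + 1][j] for wk in w])
    return V, H


A = [[2.0, 1.0, 0.0],
     [0.0, 3.0, 1.0],
     [1.0, 0.0, 4.0]]
V, H = arnoldi(A, [1.0, 0.0, 0.0], 2)
```

Each pass through the inner loop needs one inner product per stored basis vector, which is why storage and work grow with the basis dimension and why a restarted variant is attractive.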

GMRES with eigenvalues estimation. It is very easy to obtain estimates of the eigenvalues of $Q_1 A Q_2$ at each iteration of GMRES, since the upper Hessenberg matrix $H_k$ computed during the Arnoldi process satisfies $Q_1 A Q_2 V_k = V_k H_k$. The eigenvalues of $H_k$ approximate those of $Q_1 A Q_2$, especially on the boundaries of the region containing $\lambda(Q_1 A Q_2)$. The QR algorithm can be used to obtain the eigenvalues of $H_k$. The LAPACK routine _HSEQR [1, pp. 158-159] is used for this purpose.

The routine PIM_RGMRESEV returns a box in the complex plane, defining the minimum and maximum values along the real and imaginary axes. These values can then be used by the Chebyshev acceleration routine, PIM_CHEBYSHEV.

GCR. The GCR method is generally used in its restarted form for reasons similar to those given above for GMRES. It is mathematically equivalent to the restarted version of GMRES but it is not as robust. It is applicable to systems where the coefficient matrix is of the form $A = \sigma I + R$, with $\sigma$ complex and $R$ real symmetric, and $A = \sigma I + S$, with $\sigma$ real and $S^H = -S$, arising in electromagnetics and quantum chromodynamics applications respectively [4].

QMR. The QMR method by Freund and Nachtigal [27] overcomes the difficulties associated with the Bi-CG method. The original QMR algorithm uses the three-term recurrences as found in the underlying Lanczos process. In [8], Bücker and Sauren propose a highly parallel algorithm for the QMR method, with a single global synchronization point, which is implemented in this version of PIM.

TFQMR. TFQMR is a variant of CGS proposed by Freund [24]. TFQMR uses all available search direction vectors instead of the two search vectors used in CGS. Moreover, these vectors are combined using a parameter which can be obtained via a quasi-minimisation of the residual. The method is thus extremely robust and has the advantage of not requiring the computation of transpose matrix-vector products. PIM offers TFQMR with 2-norm weights (see [24, Algorithm 5.1]).

Chebyshev acceleration. The Chebyshev acceleration is a polynomial acceleration applied to basic stationary methods of the form

$$x_{k+1} = G x_k + f$$

where $G = I - Q_1 A$ and $f = Q_1 b$. If we consider $k$ iterations of the above method, the iterates $x_k$ may be linearly combined such that $y = \sum_{j=0}^{k} c_j x_j$ is a better approximation to $x = A^{-1} b$. The coefficients $c_j$ are chosen so that the norm of the error vector is minimized and $\sum_{j=0}^{k} c_j = 1$. If we assume that the eigenvalues of $G$ are contained in an interval $[\alpha, \beta]$, with $-1 < \alpha \le \beta < 1$, then the Chebyshev polynomials satisfy the above conditions on the $c_j$'s. We refer the user to [32, pp. 45-58, 332-339] for more details.

The Chebyshev acceleration has the property that its iterates can be expressed by short (three-term) recurrence relations and, especially for parallel computers, no inner-products or vector norms are needed (except for the stopping test). The difficulty associated with the Chebyshev acceleration is the need for good estimates either for the smallest or largest eigenvalues of $G$ if the eigenvalues are real, or, in the case of a complex eigenspectrum, a region in the complex plane containing the eigenvalues of minimum and maximum modulus.

With PIM, the user may make use of two routines, PIM_CGEV and PIM_RGMRESEV, to obtain such estimates. PIM_CGEV covers the case where the eigenvalues of $G$ are real; for the complex case, PIM_RGMRESEV should be used. To obtain appropriately accurate estimates, these routines must be used with left-preconditioning, and should be allowed to run for several iterations. The estimates for the eigenvalues of $Q_1 A$ should then be modified to those of $I - Q_1 A$. This is done by replacing the smallest and largest real values, $r$ and $s$, by $1 - s$ and $1 - r$ respectively. The imaginary values should not be modified.
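The conversion of the estimates can be written down in one line. The Python helper below is a hypothetical illustration (the function name and the tuple layout are assumptions, not part of PIM); it follows the rule stated above, mapping the real bounds $r$ and $s$ to $1 - s$ and $1 - r$ and leaving the imaginary bounds unchanged.

```python
def box_for_iteration_matrix(r, s, t, u):
    """Turn a bounding box (r, s, t, u) for the eigenvalues of Q1*A,
    with real parts in [r, s] and imaginary parts in [t, u], into the
    corresponding box for G = I - Q1*A."""
    return (1.0 - s, 1.0 - r, t, u)


# Example: eigenvalues of Q1*A estimated to lie in [0.5, 1.5] x [-0.25, 0.25].
g_box = box_for_iteration_matrix(0.5, 1.5, -0.25, 0.25)
```

In this example the real parts of the eigenvalues of $G$ are bounded by $-0.5$ and $0.5$, so they lie strictly inside $(-1, 1)$ as the Chebyshev acceleration requires.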

We note that even if $A$ has only real eigenvalues, $G$ may have complex (or purely imaginary) eigenvalues. In this latter case, the Chebyshev acceleration is defined in terms of a minimum bounding ellipse that contains the eigenvalues of $G$. If we obtain a box $[r, s, t, u]$ where $r \le \mathrm{Re}(\lambda(G)) \le s$ and $t \le \mathrm{Im}(\lambda(G)) \le u$, then the axes of this ellipse are defined as

$$p = \sqrt{2}\,(r + s)/2, \qquad q = \sqrt{2}\,(t + u)/2$$

These parameters for the Chebyshev iteration are computed by PIM_CHEBYSHEV. An example of the use of this routine may be found in Section 4.5.

For nonsymmetric systems, one may use a combination of the routines PIM_RGMRESEV and PIM_CHEBYSHEV as proposed by Elman et al. as a hybrid method [21, page 847].

12

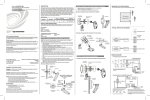

Figure 1: Selecting an iterative method.

Symmetric matrix?

Y

N

Eigenvalue

estimation?

Y

CGEV

Eigenvalue

estimation?

N

CG

CHEBYSHEV

Y

RGMRESEV

N

Transpose

matrix-vector product

available?

Y

Notes:

(1) only for mildly nonsymmetric systems

(2) use a small restarting

value if storage is at

a premium

Bi-CG

CGNR

CGNE

QMR

N

CG (1)

CGS

Bi-CGSTAB

RBi-CGSTAB

TFQMR

RGMRES (2)

RGCR (2)

CHEBYSHEV

To conclude this section, Figure 1 shows a diagram to aid in the selection of an iterative

method.

3 Internal details of PIM

3.1 Supported architectures and environments

PIM has been tested on scalar, vector and parallel computers including the Cray Y-MP2E/232, Cray Y-MP C90/16256, SGI Challenge, Intel Paragon, TMC CM-5² and networks of workstations under PVM 3.3.6, CHIMP/MPI v1.2, Argonne MPI, p4 v1.2, TCGMSG 4.02 and Intel NX. Table 1 lists the architectures and environments on which PIM has been successfully tested.

² The results obtained are based upon a beta version of the software and, consequently, are not necessarily representative of the performance of the full version of this software.

Table 1: Computers where PIM has been tested

| Architecture | Compiler and O/S |
|---|---|
| Sun SPARC | Sun Fortran 1.4 - SunOS 4.1.3 |
| Sun SPARC | Sun Fortran 2.0.1 - SunOS 5.2 |
| Sun SPARC | EPC Fortran 77 - SunOS 4.1.3 |
| Sun SPARC | EPC Fortran 90 - SunOS 4.1.3 |
| Sun SPARC | NAG Fortran 90 - SunOS 4.1.3 |
| DEC AXP 4000/610 | DEC Fortran 3.3-1 - DEC OSF/1 1.3 |
| DEC AXP 3000/800 | DEC Fortran 3.4-480 - DEC OSF/1 2.0 |
| SGI IRIS Indigo | MIPS Fortran 4.0.5 - SGI IRIX 4.0.5F |
| SGI IRIS Crimson | MIPS Fortran 4.0.5 - SGI IRIX 4.0.5C |
| SGI Indy II | MIPS Fortran 5.0 - SGI IRIX 5.1.1 |
| Cray Y-MP2E/232 | Cray Fortran 6.0 - UNICOS 7.0.5.2 |
| Cray Y-MP C90/16256 | Cray Fortran 7.0 - UNICOS 8.2.3 |
| SGI Challenge | MIPS Fortran 5.2 - SGI IRIX 5.2 |
| Intel Paragon XP/S | Portland if77 4.5 - OSF/1 1.2.6 |
| IBM 9076 SP/1 | IBM XL Fortran 6000 2.3 - AIX 3.2 |
| Cray T3D | Cray Fortran 8.0 - UNICOS 8.3.3 |
| TMC CM-5 | CM Fortran 77 |

3.2 Parallel programming model

PIM uses the Single Program, Multiple Data (SPMD) programming model. The main implication of using this model is that certain scalar values are needed in each processing element (PE).

Two of the user-supplied routines, to compute a global sum and a vector norm, must provide

for this, preferably making use of a reduction and/or broadcast routine like those present on

PVM 3.3.6 and MPI.

3.3 Data partitioning

With PIM, the iterative method routines have no knowledge of the way in which the user has chosen to store and access either the coefficient or the preconditioning matrices. We thus restrict ourselves to partitioning the vectors.

The assumption made is that each PE knows the number of elements of each vector stored in it and that all vector variables in a processor have the same number of elements. This is a broad assumption that allows us to accommodate many different data partitioning schemes, including contiguous, cyclic (or wrap-around) and scattered partitionings. We are able to make this assumption because the vector-vector operations used (vector accumulations, assignments and copies) are disjoint element-wise. The other operations used, involving matrices and vectors, which may require knowledge of the individual indices of vectors, are the responsibility of the user.

PIM requires that the elements of vectors be stored locally starting from position 1; thus the user has a local numbering of the variables which can be translated to a global numbering if required. For example, if a vector of 8 elements is partitioned in wrap-around fashion among 2 processors, using blocks of length 1, then the first processor stores elements 1, 3, 5 and 7 in the first four positions of an array; the second processor then stores elements 2, 4, 6 and 8 in positions 1 to 4 of its array. We stress that for most of the commonly used partitioning schemes data may be retrieved with very little overhead.
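The local-to-global translation in the wrap-around example can be sketched as follows. This is an illustration only and not part of PIM; the names N, NPROCS, MYID, ILOC and IGLOB are hypothetical, and processors are assumed to be numbered from 0.

```fortran
      PROGRAM CYCMAP
*     Illustrative sketch only (not part of PIM): maps local indices
*     to global indices for a wrap-around (cyclic) partitioning with
*     blocks of length 1. All names are hypothetical; processors
*     are numbered from 0.
      INTEGER N,NPROCS
      PARAMETER (N=8,NPROCS=2)
      INTEGER MYID,ILOC,LOCLEN,IGLOB
      DO 20 MYID = 0,NPROCS-1
*        Number of elements held by this processor
         LOCLEN = (N-MYID+NPROCS-1)/NPROCS
         DO 10 ILOC = 1,LOCLEN
*           Local position ILOC holds global element IGLOB
            IGLOB = (ILOC-1)*NPROCS + MYID + 1
            WRITE (6,FMT=9000) MYID,ILOC,IGLOB
   10    CONTINUE
   20 CONTINUE
      STOP
 9000 FORMAT ('PE ',I2,': LOCAL ',I2,' -> GLOBAL ',I2)
      END
```

For N=8 and NPROCS=2 the loop assigns global elements 1, 3, 5, 7 to local positions 1 to 4 of processor 0 and elements 2, 4, 6, 8 to processor 1, matching the example in the text.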

3.4 Increasing the parallel scalability of iterative methods

One of the main causes for the poor scalability of implementations of iterative methods on distributed-memory computers is the need to compute inner-products, $\alpha = u^T v = \sum_{i=1}^{n} u_i v_i$, where $u$ and $v$ are vectors distributed across $p$ processors (without loss of generality assume that each processor holds $n/p$ elements of each vector). This computation can be divided in three parts:

1. The local computation of partial sums of the form $\beta_j = \sum_{i=1}^{n/p} u_i v_i$, on each processor,

2. The reduction of the $\beta_j$ values, where these values travel across the processors in some efficient way (for instance, as if traversing a binary-tree up to its root) and are summed during the process. At the end, the value of $\alpha = \sum_{j=1}^{p} \beta_j$ is stored in a single processor,

3. The broadcast of $\alpha$ to all processors.

During parts 2 and 3 a number of processors are idle for some time. A possible strategy to reduce this idle time, and thus increase the scalability of the implementation, is to re-arrange the operations in the algorithm so that parts 2 and 3 accumulate a number of partial sums corresponding to some inner-products. Some of the algorithms available in PIM, including CG, CGEV, Bi-CG, CGNR and CGNE, have been rewritten using the approach suggested by D'Azevedo and Romine [15]. Others, like Bi-CGSTAB, RBi-CGSTAB, RGCR, RGMRES and QMR, have not been re-arranged but some or all of their inner-products can be computed with a single global sum operation.

The computation of the last two parts depends on the actual message-passing library being used. With MPI, parts 2 and 3 are also offered as a single operation called MPI_ALLREDUCE. Applications using the PVM 3.3.6 Fortran interface should however call PVMFREDUCE and then PVMFBCAST.

An important point to make is that we have chosen modifications to the iterative methods that reduce the number of synchronization points while at the same time maintaining their convergence properties and numerical qualities. This is the case of the D'Azevedo and Romine modification; also, in the specific case of GMRES, which uses the Arnoldi process (a suitable reworking of the modified Gram-Schmidt procedure) to compute a vector basis, the computation of several inner-products with a single global sum does not compromise numerical stability.

For instance, in the algorithm for the restarted GMRES (see Algorithm A.9), step 5 involves the computation of $j$ inner-products of the form $V_i^T V_j$, $i = 1, 2, \ldots, j$. It is thus possible to arrange for each processor to compute $j$ partial sums using the BLAS routine _DOT and store these in an array. Then in a single call to a reduction routine, these arrays are communicated among the processors and their individual elements are summed. On the completion of the global sum the array containing the respective $j$ inner-products is stored in a single processor and is then broadcast to the remaining processors.

The CGS and TFQMR implementations available on PIM do not benefit from this approach.
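The idea of accumulating several partial sums before a single global sum can be sketched as below. This is an illustration and not PIM source: the names V, W, H, J and LOCLEN are hypothetical, and the PDSUM shown is a sequential stand-in that leaves its argument unchanged (on a parallel machine it would wrap a reduction-plus-broadcast).

```fortran
      PROGRAM BATCH
*     Illustrative sketch (not PIM source): J partial inner-products
*     are stored in the array H and combined by ONE call to the
*     global sum routine. All names are hypothetical.
      INTEGER LOCLEN,J
      PARAMETER (LOCLEN=4,J=3)
      DOUBLE PRECISION V(LOCLEN,J),W(LOCLEN),H(J)
      INTEGER I,K,IPAR(13)
      EXTERNAL PDSUM
      DO 20 I = 1,J
         DO 10 K = 1,LOCLEN
            V(K,I) = DBLE(I)
   10    CONTINUE
   20 CONTINUE
      DO 30 K = 1,LOCLEN
         W(K) = 1.0D0
   30 CONTINUE
*     Local partial sums: H(I) = (column I of V) dot W
      DO 50 I = 1,J
         H(I) = 0.0D0
         DO 40 K = 1,LOCLEN
            H(I) = H(I) + V(K,I)*W(K)
   40    CONTINUE
   50 CONTINUE
*     One global sum for all J values instead of J separate ones
      CALL PDSUM(J,H,IPAR)
      WRITE (6,FMT=9000) (H(I),I=1,J)
      STOP
 9000 FORMAT (3(D12.5,1X))
      END

      SUBROUTINE PDSUM(ISIZE,X,IPAR)
      INTEGER ISIZE,IPAR(*)
      DOUBLE PRECISION X(*)
*     Sequential stand-in: X already holds the global values
      RETURN
      END
```

With the data above H holds 4, 8 and 12 after the call; the point of the sketch is that the number of synchronization points does not grow with J.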

3.5 Stopping criteria

PIM offers a number of stopping criteria which may be selected by the user. In Table 2 we list the different criteria used; $r_k = b - A x_k$ is the true residual of the current estimate $x_k$, $z_k$ is the pseudo-residual (usually generated by linear recurrences and possibly involving the preconditioners) and $\varepsilon$ is the user-supplied tolerance. Note that the norms are not indicated; these depend on the user-supplied routine to compute a vector norm.

Table 2: Stopping criteria available on PIM

No.  Stopping criterion
---  ------------------------------------------
1    $\| r_k \| < \varepsilon$
2    $\| r_k \| < \varepsilon \| b \|$
3    $\sqrt{r_k^T z_k} < \varepsilon \| b \|$
4    $\| z_k \| < \varepsilon$
5    $\| z_k \| < \varepsilon \| b \|$
6    $\| z_k \| < \varepsilon \| Q_1 b \|$
7    $\| x_k - x_{k-1} \| < \varepsilon$

If speed of execution is of foremost importance, the user needs to select the stopping criterion that will impose the minimum overhead. The following notes may be of use in the selection of an appropriate stopping criterion:

1. If the stopping criterion selected is one of 1, 2 or 3 then the true residual is computed (except when using TFQMR with either no preconditioning or left preconditioning).

2. The restarted GMRES method uses its own stopping criterion (see [42, page 862]) which is equivalent to the 2-norm of the residual (or pseudo-residual if preconditioning is used).

3. If either no preconditioning or right-preconditioning is used and criterion 6 is selected, the PIM iterative method called will flag the error and exit without solving the system (except for the restarted GMRES routine).

4 Using PIM

4.1 Naming convention of routines

The PIM routines have names of the form

      PIM_method

where _ indicates single-precision (S), double-precision (D), complex (C) or double-precision complex (Z) and method is one of: CG, CGEV (CG with eigenvalue estimation), CGNR, CGNE, BICG, CGS, BICGSTAB, RBICGSTAB, RGMRES, RGMRESEV (RGMRES with eigenvalue estimation), RGCR, QMR, TFQMR and CHEBYSHEV.

4.2 Obtaining PIM

PIM 2.2 is available via anonymous ftp from

      unix.hensa.ac.uk, file /pub/misc/netlib/pim/pim22.tar.Z

and

      ftp.mat.ufrgs.br, file /pub/pim/pim22.tar.gz

There is also a PIM World-Wide-Web homepage which can be accessed at

      http://www.mat.ufrgs.br/pim-e.html

which gives a brief description of the package and allows the reader to download the software and related documentation.

The current distribution contains

- The PIM routines in the directories single, double, complex and dcomplex,

- A set of example programs for sequential and parallel execution (using PVM and MPI) in the directories examples/sequential, examples/pvm and examples/mpi,

- This guide in PostScript format in the doc directory.

4.3 Installing PIM

To install PIM, unpack the distributed compressed (or gzipped) tar file:

      uncompress pim22.tar.Z (or gunzip pim22.tar.gz)
      tar xfp pim22.tar
      cd pim

and edit the Makefile. The following variables may need to be modified:

HOME       Your top directory, e.g., /u1/users/fred

FC         Your Fortran compiler of choice, usually f77

FFLAGS     Flags for the Fortran compilation of main programs (example programs)

OFFLAGS    Flags for the Fortran compilation of separate modules (PIM routines and modules of examples). NOTE: This must include at least the flag required for separate compilation (usually -c)

AR         The archiver program, usually ar

HASRANLIB  Either t (true) or f (false), indicating if it is necessary to use a random library program (usually ranlib) to build the PIM library

BLASLIB    Either the name of an archive file containing the BLAS library or -lblas if the library libblas.a has been installed on a system-wide basis

PARLIB     The compilation switches for any required parallel libraries. This variable must be left blank if PIM is to be used in sequential mode. For example, if PVM 3 is to be used, then PARLIB would be defined as

      -L$(PVM_ROOT)/lib/$(PVM_ARCH) -lfpvm3 -lpvm3 -lgpvm3

Each iterative method routine is stored in a separate file with names in lower case following the naming convention of the routines, e.g., the routine PIMDCG is stored in the file pim22/double/pimdcg.f.

Building the PIM core functions PIM needs the values of some machine-dependent floating-point constants. The single- and double-precision values are stored in the files pim22/common/smachcons.f and pim22/common/dmachcons.f respectively. Default values for the IEEE-754 floating-point standard are supplied separately in the files pim/common/smachcons.f.ieee754 and pim/common/dmachcons.f.ieee754; these are used by default. However, if you are using PIM on a computer which does not support the IEEE-754 standard, you may:

1. type make smachcons or make dmachcons; this will compile and execute a program which uses the LAPACK routine _LAMCH to compute those constants, and the relevant files will be generated.

2. edit either pim/common/smachcons.f.orig or pim/common/dmachcons.f.orig and replace the strings MACHEPSVAL, UNDERFLOWVAL and OVERFLOWVAL by the values of the machine epsilon and of the underflow and overflow thresholds of the particular computer you are using, in single- or double-precision as appropriate.

To build PIM, type make makefiles to build the makefiles in the appropriate directories and then make single, make double, make complex or make dcomplex to build the single-precision, double-precision, complex or double complex versions of PIM. This will generate .o files, one for each iterative method routine, along with the library file libpim.a which contains the support routines.

Building the examples Example programs are provided for sequential use, and for parallel use with MPI and PVM (see footnote 3).

The example programs require a timing routine. The distribution comes with the file examples/common/timer.f which contains examples of the timing functions available on the Cray, the IBM RS/6000 and also the UNIX etime function. By default, the latter is used; this file must be modified to use the timing function available on the target machine.

The PVM and MPI example programs use the Fortran INCLUDE statement to include the PVM and MPI header files. Some compilers have a switch (usually -I) which allows the user to provide search directories in which files to be included are located (as with the IBM AIX XL Fortran compiler), while others require those files to be present in the directory where the source code resides. In the first case, you will need to include in the FFLAGS variable the relevant switches (see §4.3); in the latter, you will need to install the PVM and MPI header files (fpvm3.h and mpif.h respectively) by typing

      make install-pvm-include INCFILE=<name-of-fpvm3.h>
      make install-mpi-include INCFILE=<name-of-mpif.h>

where you should replace <name-of-fpvm3.h> and <name-of-mpif.h> by the full filename of the required include files; for instance, if PVM is installed on /usr/local/pvm3 then you should type

      make install-pvm-include INCFILE=/usr/local/pvm3/include/fpvm3.h

Figure 2 shows the directory tree containing the examples. To build them, type make followed by the name of a subdirectory of examples, e.g., make sequential/single/dense.

Footnote 3: The PVM examples use the "groups" library libgpvm3.a which provides the reduction functions.

Figure 2: Directories containing the examples.

[Directory tree not reproduced. Its root is examples; its nodes include sequential, pvm and mpi, the precision directories single, double, complex and dcomplex, and the problem directories dense, pde, pvp-pde and harwell-boeing.]

The example programs can also be built locally in those directories by changing to a specific directory and typing make.

Cleaning-up You may wish to remove some or all of the compiled codes or other files installed under the PIM directory; in this case you may type one of the following

      make singleclean
      make doubleclean
      make complexclean
      make dcomplexclean
      make sequentialclean
      make pvmclean
      make mpiclean
      make clean-pvm-include
      make clean-mpi-include
      make examplesclean
      make makefilesclean
      make realclean

which will clean-up the PIM routines, the examples, the Makefiles, the include files and all generated files, returning the package to its distribution form.

Using PIM in your application To use PIM with your application, link your program with the .o file corresponding to the PIM iterative method routine being called and with the PIM support library libpim.a.

4.4 Calling a PIM iterative method routine

With the exception of the Bi-CG, CGNR, CGNE and QMR methods, all the implemented methods have the same parameter list as CG. The argument list for the double-precision implementation of the CG method is

      SUBROUTINE PIMDCG(X,B,WRK,IPAR,DPAR,MATVEC,PRECONL,PRECONR,
     +                  PDSUM,PDNRM,PROGRESS)

and for Bi-CG (as well as for CGNR, CGNE and QMR)

      SUBROUTINE PIMDBICG(X,B,WRK,IPAR,DPAR,MATVEC,TMATVEC,PRECONL,
     +                    PRECONR,PDSUM,PDNRM,PROGRESS)

where the parameters are as follows

Parameter  Description
---------  ---------------------------------------------------
X          A vector of length IPAR(4)
           On input, contains the initial estimate
           On output, contains the last estimate computed
B          The right-hand-side vector of length IPAR(4)
WRK        A work vector used internally (see the description
           of each routine for its length)
IPAR       An integer array containing input-output parameters
_PAR       A floating-point array containing input-output parameters
MATVEC     Matrix-vector product external subroutine
TMATVEC    Transpose-matrix-vector product external subroutine
PRECONL    Left-preconditioning external subroutine
PRECONR    Right-preconditioning external subroutine
P_SUM      Global sum (reduction) external function
P_NRM      Vector norm external function
PROGRESS   Monitoring routine

Note in the example above that, contrary to the proposal in [5], PIM uses separate routines to compute the matrix-vector and transpose-matrix-vector products. See the reference manual, sections A.1 and A.2, for the description of the parameters above and the synopsis of the external routines.

4.5 External routines

As stated earlier, the user is responsible for supplying certain routines to be used internally by the iterative method routines. One of the characteristics of PIM is that if external routines are not required by an iterative method routine they are not called (the only exception being the monitoring routines). The user only needs to provide those subroutines that will actually be called by an iterative method routine, depending on the selection of method, preconditioners and stopping criteria; dummy parameters may be passed in place of those that are not used. Some compilers may require the presence of all routines used in the program during the linking phase of the compilation; in this case the user may need to provide stubs for the dummy routines. Section A.2 gives the synopsis of each user-supplied external routine used by PIM.

The external routines have a fixed parameter list to which the user must adhere (see §A.2). Note that (from version 2.2 onwards) the coefficient and the preconditioning matrices do not appear in the parameter list of the PIM routines. Indeed we regard the matrix-vector products and preconditioning routines as operators returning only the appropriate resulting vector; thus the PIM routines have no knowledge of the way in which the matrices are stored.

The external routines, however, may access the matrices declared in the main program via COMMON blocks. This strategy hides from the PIM routines details of how the matrices are declared in the main program and thus allows the user to choose the most appropriate storage method for her problem; previous versions of PIM were more restrictive in this sense.

Matrix-vector product Consider as an example a dense matrix partitioned by contiguous

columns among a number of processors. For illustrative purposes we assume that N is an integer

multiple of NPROCS, and that LOCLEN=N/NPROCS. The following code may then be used

      PROGRAM MATV
* A IS DECLARED AS IF USING A COLUMN PARTITIONING FOR AT LEAST
* TWO PROCESSORS.
      INTEGER LDA
      PARAMETER (LDA=500)
      INTEGER LOCLEN
      PARAMETER (LOCLEN=250)
      DOUBLE PRECISION A(LDA,LOCLEN)
      COMMON /PIMA/A
      EXTERNAL MATVEC,PRECONL,PRECONR,PDSUM,PDNRM,PROGRESS
* SET UP PROBLEM SOLVING PARAMETERS FOR USE BY USER DEFINED ROUTINES
* THE USER MAY NEED TO SET MORE VALUES OF THE IPAR ARRAY
* LEADING DIMENSION OF A
      IPAR(1)=LDA
* NUMBER OF ROWS/COLUMNS OF A
      IPAR(2)=N
* NUMBER OF PROCESSORS
      IPAR(6)=NPROCS
* NUMBER OF ELEMENTS STORED LOCALLY
      IPAR(4)=N/IPAR(6)
* CALL PIM ROUTINE
      CALL PIMDCG(X,B,WRK,IPAR,DPAR,MATVEC,PRECONL,PRECONR,PDSUM,
     +            PDNRM,PROGRESS)
      STOP
      END

* MATRIX-VECTOR PRODUCT ROUTINE CALLED BY A PIM ROUTINE. THE
* ARGUMENT LIST TO THIS ROUTINE IS FIXED.
      SUBROUTINE MATVEC(U,V,IPAR)
      DOUBLE PRECISION U(*),V(*)
      INTEGER IPAR(*)
      INTEGER LDA
      PARAMETER (LDA=500)
      INTEGER LOCLEN
      PARAMETER (LOCLEN=250)
      DOUBLE PRECISION A(LDA,LOCLEN)
      COMMON /PIMA/A
      .
      .
      .
      RETURN
      END

The scheme above can be used for the transpose-matrix-vector product as well. We note that many different storage schemes are available for storing sparse matrices; the reader may find it useful to consult Barrett et al. [6, pp. 57] where such schemes as well as algorithms to compute matrix-vector products are discussed.
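For the sequential case, where one processor holds all of A, the elided body of MATVEC might be filled in as sketched below. This is an illustration and not PIM source: the 3x3 test matrix, the driver MATV1 and the loop ordering are assumptions made for the example, while the fixed argument list (U,V,IPAR) and the use of a COMMON block follow the text above.

```fortran
      PROGRAM MATV1
*     Illustrative sketch (not PIM source) of a MATVEC body for the
*     sequential case. Matrix size and contents are made up.
      INTEGER LDA
      PARAMETER (LDA=3)
      DOUBLE PRECISION A(LDA,LDA),U(LDA),V(LDA)
      INTEGER IPAR(13),I,J
      COMMON /PIMA/A
      EXTERNAL MATVEC
*     Tridiagonal test matrix with 4 on the diagonal and 1 beside it
      DO 20 J = 1,LDA
         DO 10 I = 1,LDA
            A(I,J) = 0.0D0
            IF (I.EQ.J) A(I,J) = 4.0D0
            IF (ABS(I-J).EQ.1) A(I,J) = 1.0D0
   10    CONTINUE
         U(J) = 1.0D0
   20 CONTINUE
      IPAR(1) = LDA
      IPAR(2) = LDA
      CALL MATVEC(U,V,IPAR)
      WRITE (6,FMT=9000) (V(I),I=1,LDA)
      STOP
 9000 FORMAT (3(F6.2,1X))
      END

      SUBROUTINE MATVEC(U,V,IPAR)
      DOUBLE PRECISION U(*),V(*)
      INTEGER IPAR(*)
      INTEGER LDA
      PARAMETER (LDA=3)
      DOUBLE PRECISION A(LDA,LDA)
      COMMON /PIMA/A
      INTEGER I,J,N
      N = IPAR(2)
*     V = A*U, traversing A by columns (unit stride in Fortran)
      DO 10 I = 1,N
         V(I) = 0.0D0
   10 CONTINUE
      DO 30 J = 1,N
         DO 20 I = 1,N
            V(I) = V(I) + A(I,J)*U(J)
   20    CONTINUE
   30 CONTINUE
      RETURN
      END
```

With U set to all ones, V contains the row sums of A (5, 6 and 5). On several processors the same loops would produce only partial sums, which must then be combined as described in Section 4.6.2.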

Preconditioning For the preconditioning routines, one may use the scheme outlined above for the matrix-vector product; in some cases this may not be necessary, when there is no need to operate with A or the preconditioner is stored as a vector. An example is the diagonal (or Jacobi) left-preconditioning, where $Q_1 = \mathrm{diag}(A)^{-1}$

      PROGRAM DIAGP
      INTEGER LDA
      PARAMETER (LDA=500)
      INTEGER LOCLEN
      PARAMETER (LOCLEN=250)
* Q1 IS DECLARED AS A VECTOR OF LENGTH 250, AS IF USING AT LEAST
* TWO PROCESSORS.
      DOUBLE PRECISION A(LDA,LOCLEN),Q1(LOCLEN)
      COMMON /PIMQ1/Q1
      EXTERNAL MATVEC,DIAGL,PDUMR,PDSUM,PDNRM,PROGRESS
* SET UP PROBLEM SOLVING PARAMETERS FOR USE BY USER DEFINED ROUTINES
* THE USER MAY NEED TO SET MORE VALUES OF THE IPAR ARRAY
* LEADING DIMENSION OF A
      IPAR(1)=LDA
* NUMBER OF ROWS/COLUMNS OF A
      IPAR(2)=N
* NUMBER OF PROCESSORS
      IPAR(6)=NPROCS
* NUMBER OF ELEMENTS STORED LOCALLY
      IPAR(4)=N/IPAR(6)
* SET LEFT-PRECONDITIONING
      IPAR(8)=1
      .
      .
      .
      DO 10 I=1,N
         Q1(I)=1.0D0/A(I,I)
   10 CONTINUE
      .
      .
      .
      CALL DINIT(IPAR(4),0.0D0,X,1)
      CALL PIMDCG(X,B,WRK,IPAR,DPAR,MATVEC,DIAGL,PDUMR,PDSUM,
     +            PDNRM,PROGRESS)
      STOP
      END
      .
      .
      .
      SUBROUTINE DIAGL(U,V,IPAR)
      DOUBLE PRECISION U(*),V(*)
      INTEGER IPAR(*)
      INTEGER LOCLEN
      PARAMETER (LOCLEN=250)
      DOUBLE PRECISION Q1(LOCLEN)
      COMMON /PIMQ1/Q1
      CALL DCOPY(IPAR(4),U,1,V,1)
      CALL DVPROD(IPAR(4),Q1,1,V,1)
      RETURN
      END

where DVPROD is a routine based on the BLAS DAXPY routine that performs an element-by-element vector multiplication. This example also shows the use of dummy arguments (PDUMR).
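A possible DVPROD is sketched below. The argument list is inferred from the call CALL DVPROD(IPAR(4),Q1,1,V,1) in DIAGL, so it is an assumption; the version shipped with PIM may differ. The driver TVPROD and its data are hypothetical.

```fortran
      PROGRAM TVPROD
*     Illustrative sketch: exercises one possible DVPROD. The
*     argument list is inferred from the call in DIAGL; the routine
*     distributed with PIM may differ.
      INTEGER N
      PARAMETER (N=3)
      DOUBLE PRECISION Q(N),V(N)
      INTEGER I
      EXTERNAL DVPROD
      DO 10 I = 1,N
         Q(I) = 1.0D0/DBLE(2*I)
         V(I) = DBLE(2*I)
   10 CONTINUE
      CALL DVPROD(N,Q,1,V,1)
      WRITE (6,FMT=9000) (V(I),I=1,N)
      STOP
 9000 FORMAT (3(F6.2,1X))
      END

      SUBROUTINE DVPROD(N,X,INCX,Y,INCY)
*     Y(I) = X(I)*Y(I), I=1,...,N (positive increments assumed)
      INTEGER N,INCX,INCY
      DOUBLE PRECISION X(*),Y(*)
      INTEGER I,IX,IY
      IX = 1
      IY = 1
      DO 10 I = 1,N
         Y(IY) = X(IX)*Y(IY)
         IX = IX + INCX
         IY = IY + INCY
   10 CONTINUE
      RETURN
      END
```

In the driver each V(I) is multiplied by its reciprocal, so the printed values are all 1.00, which is what applying the Jacobi preconditioner to the diagonal itself should give.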

Note that it is the responsibility of the user to ensure that, when using preconditioning, the matrix $Q_1 A Q_2$ satisfies any requirements made by the iterative method being used with respect to the symmetry and/or positive-definiteness of the matrix. For example, if A is a matrix with arbitrary (i.e., non-constant) diagonal entries, then both $\mathrm{diag}(A)^{-1} A$ and $A\,\mathrm{diag}(A)^{-1}$ will not be symmetric, and the CG and CGEV methods will generally fail to converge. For these methods symmetric preconditioning, $\mathrm{diag}(A)^{-1/2} A\,\mathrm{diag}(A)^{-1/2}$, should be used.
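A small worked example may make the loss of symmetry concrete. The 2x2 matrix below is chosen purely for illustration, with $D = \mathrm{diag}(A)$:

```latex
A = \begin{bmatrix} 2 & 1 \\ 1 & 4 \end{bmatrix}, \qquad
D^{-1} A = \begin{bmatrix} 1 & 1/2 \\ 1/4 & 1 \end{bmatrix}, \qquad
A D^{-1} = \begin{bmatrix} 1 & 1/4 \\ 1/2 & 1 \end{bmatrix}, \qquad
D^{-1/2} A D^{-1/2} =
\begin{bmatrix} 1 & \frac{1}{2\sqrt{2}} \\ \frac{1}{2\sqrt{2}} & 1 \end{bmatrix}
```

Left and right scaling each divide only the rows or only the columns, so the off-diagonal entries differ; the symmetric scaling divides entry $(i,j)$ by $\sqrt{d_i d_j}$ and therefore preserves symmetry.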

Inner-products, vector norms and global accumulation When running PIM routines on multiprocessor architectures, the inner-product and vector norm routines require reduction and broadcast operations (in some message-passing libraries these can be supplied by a single routine). On vector processors these operations are handled directly by the hardware whereas on distributed-memory architectures these operations involve the exchange of messages among the processors.

When a PIM iterative routine needs to compute an inner-product, it calls _DOT to compute the partial inner-product values. The user-supplied routine P_SUM is then used to generate the global sum of those partial sums. The following code shows the routines to compute the global sum and the vector 2-norm $\| u \|_2 = \sqrt{u^T u}$ using the BLAS DDOT routine and the reduction-plus-broadcast operation provided by MPI

      SUBROUTINE PDSUM(ISIZE,X,IPAR)
      INCLUDE 'mpif.h'
      INTEGER ISIZE
      DOUBLE PRECISION X(*)
* WRK LIMITS ISIZE TO AT MOST 10 VALUES PER CALL
      DOUBLE PRECISION WRK(10)
      INTEGER IERR,IPAR(*)
      EXTERNAL DCOPY,MPI_ALLREDUCE
      CALL MPI_ALLREDUCE(X,WRK,ISIZE,MPI_DOUBLE_PRECISION,MPI_SUM,
     +                   MPI_COMM_WORLD,IERR)
      CALL DCOPY(ISIZE,WRK,1,X,1)
      RETURN
      END

      DOUBLE PRECISION FUNCTION PDNRM(LOCLEN,U,IPAR)
      INCLUDE 'mpif.h'
      INTEGER LOCLEN
      DOUBLE PRECISION U(*)
      DOUBLE PRECISION PSUM
      INTEGER IERR,IPAR(*)
      DOUBLE PRECISION DDOT
      EXTERNAL DDOT
      INTRINSIC SQRT
      DOUBLE PRECISION WRK(1)
      EXTERNAL MPI_ALLREDUCE
      PSUM = DDOT(LOCLEN,U,1,U,1)
      CALL MPI_ALLREDUCE(PSUM,WRK,1,MPI_DOUBLE_PRECISION,MPI_SUM,
     +                   MPI_COMM_WORLD,IERR)
      PDNRM = SQRT(WRK(1))
      RETURN
      END

It should be noted that P_SUM is actually a wrapper to the global sum routines available on a particular machine. Also, when executing PIM on a sequential computer, the global sum routine is empty, i.e., the contents of the array X must not be altered in any way since its elements already are the inner-product values.

The parameter list for these routines was decided upon after inspecting the format of the global operations available from existing message-passing libraries.
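For sequential execution the two routines reduce to something like the sketch below. It is an illustration rather than the code distributed with PIM: the norm is computed with an explicit loop instead of DDOT so that the example needs no BLAS library, and the driver SEQNRM is hypothetical.

```fortran
      PROGRAM SEQNRM
*     Hypothetical driver for the sequential sketches below
      DOUBLE PRECISION U(2),PDNRM
      INTEGER IPAR(13)
      EXTERNAL PDSUM,PDNRM
      U(1) = 3.0D0
      U(2) = 4.0D0
      CALL PDSUM(2,U,IPAR)
      WRITE (6,FMT=9000) PDNRM(2,U,IPAR)
      STOP
 9000 FORMAT (F6.2)
      END

      SUBROUTINE PDSUM(ISIZE,X,IPAR)
*     Sequential global sum: nothing to do, X must stay unchanged
      INTEGER ISIZE,IPAR(*)
      DOUBLE PRECISION X(*)
      RETURN
      END

      DOUBLE PRECISION FUNCTION PDNRM(LOCLEN,U,IPAR)
*     Sequential 2-norm: the local sum is already the global sum
      INTEGER LOCLEN,IPAR(*)
      DOUBLE PRECISION U(*)
      DOUBLE PRECISION S
      INTEGER I
      INTRINSIC SQRT
      S = 0.0D0
      DO 10 I = 1,LOCLEN
         S = S + U(I)*U(I)
   10 CONTINUE
      PDNRM = SQRT(S)
      RETURN
      END
```

The driver prints 5.00, the 2-norm of (3, 4). The argument lists are the same as in the MPI versions, so the calling PIM routine does not need to know which variant is linked.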

Monitoring the iterations In some cases, most particularly when selecting the iterative

method to be used for solving a specic problem, it is important to be able to obtain feedback

from the PIM routines as to how an iterative method is progressing.

To this end, we have included in the parameter list of each iterative method routine an external subroutine (called PROGRESS) which receives from that routine the number of vector elements stored locally (LOCLEN), the iteration number (ITNO), the norm of the residual (NORMRES) (according to the norm being used), the current iteration vector (X), the residual vector (RES) and the true residual vector $r_k = b - A x_k$ (TRUERES). This last vector contains meaningful values only if IPAR(9) is 1, 2 or 3.

The parameter list of the monitoring routine is fixed, as shown in §A.2. The example below shows a possible use of the monitoring routine, for the DOUBLE PRECISION data type.

      SUBROUTINE PROGRESS(LOCLEN,ITNO,NORMRES,X,RES,TRUERES)
      INTEGER LOCLEN,ITNO
      DOUBLE PRECISION NORMRES
      DOUBLE PRECISION X(*),RES(*),TRUERES(*)
      EXTERNAL PRINTV
      WRITE (6,FMT=9000) ITNO,NORMRES
      WRITE (6,FMT=9010) 'X:'
      CALL PRINTV(LOCLEN,X)
      WRITE (6,FMT=9010) 'RESIDUAL:'
      CALL PRINTV(LOCLEN,RES)
      WRITE (6,FMT=9010) 'TRUE RESIDUAL:'
      CALL PRINTV(LOCLEN,TRUERES)
      RETURN
 9000 FORMAT (/,I5,1X,D16.10)
 9010 FORMAT (/,A)
      END

      SUBROUTINE PRINTV(N,U)
      INTEGER N
      DOUBLE PRECISION U(*)
      INTEGER I
      DO 10 I = 1,N
         WRITE (6,FMT=9000) U(I)
   10 CONTINUE
      RETURN
 9000 FORMAT (4(D14.8,1X))
      END

As with the other external routines used by PIM, this routine needs to be supplied by the user; we have included the source code for the routine as shown above in the directory /pim/examples/common and this may be used as is or can be modified by the user as required. Please note that for large system sizes the routine above will produce very large amounts of output. We stress that this routine is always called by the PIM iterative method routines; if no monitoring is needed a dummy routine must be provided.

Note that some of the iterative methods contain an inner loop within the main iteration loop. This means that, for PIM_RGCR and PIM_TFQMR, the value of ITNO passed to PROGRESS will be repeated as many times as the inner loop is executed. We did not modify the iteration number passed to PROGRESS so as to reflect the true behaviour of the iterative method being used.
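When the full vectors are not wanted, a much lighter monitoring routine can be supplied. The sketch below prints one line per call and ignores the vectors; removing the WRITE statement turns it into the dummy routine mentioned above. The driver TPROG and its data are hypothetical; the argument list is the fixed one shown earlier.

```fortran
      PROGRAM TPROG
*     Hypothetical driver: calls the monitor once with made-up data
      DOUBLE PRECISION X(2),RES(2),TRUERES(2)
      EXTERNAL PROGRESS
      X(1) = 1.0D0
      X(2) = 1.0D0
      RES(1) = 0.0D0
      RES(2) = 0.0D0
      TRUERES(1) = 0.0D0
      TRUERES(2) = 0.0D0
      CALL PROGRESS(2,1,0.5D-3,X,RES,TRUERES)
      STOP
      END

      SUBROUTINE PROGRESS(LOCLEN,ITNO,NORMRES,X,RES,TRUERES)
*     Prints one line per call; the vectors are deliberately ignored
      INTEGER LOCLEN,ITNO
      DOUBLE PRECISION NORMRES
      DOUBLE PRECISION X(*),RES(*),TRUERES(*)
      WRITE (6,FMT=9000) ITNO,NORMRES
      RETURN
 9000 FORMAT (I5,1X,D16.10)
      END
```

Because the amount of output no longer depends on LOCLEN, this variant stays usable for large systems.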

4.6 Example programs

In the distributed software the user will find a collection of example programs under the directory examples. The example programs show how to use PIM with three different matrix storage formats including dense matrices, those derived from the five-point finite-difference discretisation of a partial differential equation (PDE) and the standard sparse representation found in the Harwell-Boeing sparse matrix collection [18].

Most of the examples are provided for sequential and parallel execution, the latter with separate codes for PVM 3.3.6 and MPI libraries. The examples involving the Harwell-Boeing sparse format are provided for sequential execution only.

The parallel programs for the dense and PDE storage formats have different partitioning strategies and the matrix-vector products have been designed to take advantage of these.

The systems solved have been set up such that the solution is the vector $x = (1, 1, \ldots, 1)^T$, in order to help in checking the results. For the dense storage format, the real system has the tridiagonal coefficient matrix of order $n = 500$

$$
A = \begin{bmatrix}
4 & 1 & & & \\
1 & 4 & 1 & & \\
 & \ddots & \ddots & \ddots & \\
 & & 1 & 4 & 1 \\
 & & & 1 & 4
\end{bmatrix}
$$

and the complex system of order $n = 100$ has the form $A = \mu I + S$, where $S = S^H$, $\mu = 4 - 4i$ and

$$
S = \begin{bmatrix}
0 & 1+i & & & \\
1-i & 0 & 1+i & & \\
 & \ddots & \ddots & \ddots & \\
 & & 1-i & 0 & 1+i \\
 & & & 1-i & 0
\end{bmatrix}
\qquad (5)
$$

The problem using the Harwell-Boeing format is NOS4 from the LANPRO collection of problems in structural engineering [18, pp. 54-55]. Problem NOS4 has order $n = 100$ and is derived from a finite-element approximation of a beam structure. For the PDE storage format the system being solved is derived from the five-point finite-difference discretisation of the convection-diffusion equation

$$
-\varepsilon \left( \frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2} \right)
+ \cos(\theta) \frac{\partial u}{\partial x} + \sin(\theta) \frac{\partial u}{\partial y} = 0
\qquad (6)
$$

on the unit square, with $\varepsilon = 0.1$, $\theta = -\pi/6$ and $u = x^2 + y^2$ on $\partial R$. The first order terms were discretised using forward differences (this problem was taken from [44]).

A different set of systems is used for the HYBRID examples with dense storage format. The real system has a nonsymmetric tridiagonal coefficient matrix of order $n = 500$

$$
A = \begin{bmatrix}
2 & -1 & & & \\
2 & 2 & -1 & & \\
 & \ddots & \ddots & \ddots & \\
 & & 2 & 2 & -1 \\
 & & & 2 & 2
\end{bmatrix}
$$

and the complex system of order $n = 100$ has A defined as

$$
A = \begin{bmatrix}
2 & -1+i & & & \\
2+i & 2 & -1+i & & \\
 & \ddots & \ddots & \ddots & \\
 & & 2+i & 2 & -1+i \\
 & & & 2+i & 2
\end{bmatrix}
$$

The examples include the solution of systems using different preconditioners. In the dense and Harwell-Boeing formats the examples include diagonal and polynomial preconditioners; the five-point PDE format includes a variant of the incomplete LU factorisation and polynomial preconditioners. The polynomial preconditioners provided are the Neumann and the weighted and unweighted least-squares polynomials found in [36].

4.6.1 Eigenvalues estimation and Chebyshev acceleration

Consider the use of Chebyshev acceleration to obtain a solution to a linear system whose coefficient matrix has real entries only; the eigenvalues of the iteration matrix $I - Q_1 A$ are known to lie in the complex plane. We can use a few iterations of the routine PIMDRGMRESEV to obtain estimates of the eigenvalues of $Q_1 A$ and then switch to PIMDCHEBYSHEV. Before the latter is called a transformation on the extreme values on the real axis must be made as described in Section 2.

In the example below, we use the Jacobi preconditioner as shown in §4.5. Note that the vector X returned by PIMDRGMRESEV may be used as an improved initial vector for the routine PIMDCHEBYSHEV. Both routines are combined in a loop to produce a hybrid method; the code below is based on the algorithm given by Elman et al. [21, page 847].

      PROGRAM HYBRID
      INTEGER MAXIT
      EXTERNAL MATVEC,PRECON,PDUMR,PDSUM,PDNRM2
* SET MAXIMUM NUMBER OF ITERATIONS FOR THE HYBRID LOOP
      MAXIT=INT(N/2)+1
* SET LEFT-PRECONDITIONING
      IPAR(8)=1
      CALL DINIT(N,0.0D0,X,1)
      DO 10 I = 1,MAXIT
* SET SMALL NUMBER OF ITERATIONS FOR RGMRESEV
         IPAR(10)=3
         CALL PIMDRGMRESEV(X,B,WRK,IPAR,DPAR,MATVEC,PRECON,PDUMR,
     +                     PDSUM,PDNRM2,PROGRESS)
         IF (IPAR(12).NE.-1) THEN
            IPAR(11) = I
            GO TO 20
         END IF
* MODIFY REAL INTERVAL TO REFLECT EIGENVALUES OF I-Q1A. BOX CONTAINING
* THE EIGENVALUES IS RETURNED IN DPAR(3), DPAR(4), DPAR(5), DPAR(6),
* THE FIRST TWO ARE THE INTERVAL ALONG THE REAL AXIS, THE LAST TWO ARE
* THE INTERVAL ALONG THE IMAGINARY AXIS.
         MU1 = DPAR(3)
         MUN = DPAR(4)
         DPAR(3) = 1.0D0 - MUN
         DPAR(4) = 1.0D0 - MU1
* SET NUMBER OF ITERATIONS FOR CHEBYSHEV
         IPAR(10)=5
         CALL PIMDCHEBYSHEV(X,B,DWRK,IPAR,DPAR,MATVEC,PRECON,PDUMR,
     +                      PDSUM,PDNRM2,PROGRESS)
         IF ((IPAR(12).EQ.0) .OR. (IPAR(12).EQ.-6) .OR.
     +       (IPAR(12).EQ.-7)) THEN
            IPAR(11) = I
            GO TO 20
         END IF
   10 CONTINUE
   20 CONTINUE
      .
      .
      .
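The interval update in the code (DPAR(3) = 1 - MUN, DPAR(4) = 1 - MU1) follows from a one-line argument, written out here with $\mu_1$ and $\mu_n$ standing for MU1 and MUN and $\sigma(\cdot)$ denoting the spectrum (notation introduced only for this note):

```latex
\lambda \in \sigma(Q_1 A), \quad \mu_1 \le \operatorname{Re}\lambda \le \mu_n
\;\Longrightarrow\;
1 - \lambda \in \sigma(I - Q_1 A), \quad
1 - \mu_n \le \operatorname{Re}(1 - \lambda) \le 1 - \mu_1 .
```

The end points swap because subtracting from 1 reverses the ordering on the real axis. For a real matrix $Q_1 A$ the eigenvalues occur in complex-conjugate pairs, so the imaginary interval is symmetric about zero and is unaffected by the sign change, which is consistent with DPAR(5) and DPAR(6) being left untouched.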

4.6.2 Dense storage

For the dense case, the coefficient matrix is partitioned by columns among the $p$ processors, which are considered to be logically connected on a grid (see Figure 3-A). Each processor stores at most $\lceil n/p \rceil$ columns of A. For the example shown in Figure 3-B, the portion of the matrix-vector product to be stored in processor 0 is computed according to the diagram shown in Figure 3-C. Basically, each processor computes a vector with the same number of elements as that of the target processor (0 in the example) which holds the partial sums for each element. This vector is then sent across the network to be summed in a recursive-doubling fashion until the accumulated vectors, carrying the contributions of the remaining processors, arrive at the target processor. These accumulated vectors are then summed together with the partial sum vector computed locally in the target processor, yielding the elements of the vector resulting from the matrix-vector product. This process is repeated for all processors. This algorithm is described in [13].

To compute the dense transpose-matrix-vector product, $A^T u$, each processor broadcasts to the other processors a copy of its own part of $u$. The resulting part of the $v$ vector is then computed by each processor.

4.6.3 PDE storage

For the PDE storage format, a square region is subdivided into $l + 1$ rows and columns giving a grid containing $l^2$ internal points, each point being numbered as $i + (j - 1)l$, $i, j = 1, 2, \ldots, l$ (see Figure 4). At each point we assign 5 different values corresponding to the center, north, south, east and west points on the stencil ($\alpha_{i,j}$, $\beta_{i,j}$, $\gamma_{i,j}$, $\delta_{i,j}$, $\varepsilon_{i,j}$ respectively) which are derived from the PDE and the boundary conditions of the problem. Each grid point represents a variable; the whole being obtained by solving a linear system of order $n = l^2$.

A matrix-vector product $v = Au$ is obtained by computing

$$
v_{i,j} = \alpha_{i,j} u_{i,j} + \beta_{i,j} u_{i+1,j} + \gamma_{i,j} u_{i-1,j} + \delta_{i,j} u_{i,j+1} + \varepsilon_{i,j} u_{i,j-1}
\qquad (7)
$$

where some of the $\alpha$, $\beta$, $\gamma$, $\delta$ and $\varepsilon$ may be zero according to the position of the point relative to the grid. Note that only the neighbouring points in the vertical and horizontal directions are needed to compute $v_{i,j}$.
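A sequential sketch of Equation (7) is given below. It is not the PIM example code: the array COEFS(K,I,J), K=1,...,5, holding the centre, north, south, east and west coefficients in that order, the zero border around U and all names are assumptions made for the illustration. The test coefficients (4 at the centre, -1 elsewhere) are likewise made up.

```fortran
      PROGRAM STENCL
*     Illustrative sequential sketch of Equation (7) (not PIM source).
*     COEFS(K,I,J), K=1..5, holds the centre, north, south, east and
*     west coefficients at grid point (I,J); this layout and all
*     names are assumptions made for the example.
      INTEGER L
      PARAMETER (L=3)
      DOUBLE PRECISION COEFS(5,L,L),U(0:L+1,0:L+1),V(L,L)
      INTEGER I,J,K
*     U carries a border of zeros so that no index tests are needed
      DO 20 J = 0,L+1
         DO 10 I = 0,L+1
            U(I,J) = 0.0D0
   10    CONTINUE
   20 CONTINUE
      DO 50 J = 1,L
         DO 40 I = 1,L
            U(I,J) = 1.0D0
            COEFS(1,I,J) = 4.0D0
            DO 30 K = 2,5
               COEFS(K,I,J) = -1.0D0
   30       CONTINUE
   40    CONTINUE
   50 CONTINUE
*     Apply the five-point stencil at every internal grid point
      DO 70 J = 1,L
         DO 60 I = 1,L
            V(I,J) = COEFS(1,I,J)*U(I,J) +
     +               COEFS(2,I,J)*U(I+1,J) +
     +               COEFS(3,I,J)*U(I-1,J) +
     +               COEFS(4,I,J)*U(I,J+1) +
     +               COEFS(5,I,J)*U(I,J-1)
   60    CONTINUE
   70 CONTINUE
      DO 80 J = 1,L
         WRITE (6,FMT=9000) (V(I,J),I=1,L)
   80 CONTINUE
      STOP
 9000 FORMAT (3(F6.2,1X))
      END
```

With U equal to one at every internal point, V is 2 at the corners, 1 along the edges and 0 at the centre of the 3x3 grid. Padding U with zeros is one way of handling points next to the boundary; setting the corresponding coefficients to zero, as the text describes, is the other.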

Figure 3: Matrix-vector product, dense storage format: A) Partitioning in columns, B) Example and C) Computation and communication steps.

[Figure not reproduced. Panel B shows a matrix whose first two rows are (A B C D E F G H) and (I J K L M N O P) multiplying the vector (a, b, ..., h), giving Aa+Bb+Cc+Dd+Ee+Ff+Gg+Hh and Ia+Jb+Kc+Ld+Me+Nf+Og+Ph. Panel C shows partial sums such as Gg+Hh, Ee+Ff and Cc+Dd+Gg+Hh (with their second-row counterparts) being accumulated over Step 1 and Step 2.]

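Figure 4: Matrix-vector product, PDE storage format.

[Figure not reproduced. It shows the grid partitioned in vertical panels among Processor 0, Processor 1 and Processor 2, with a legend for grid points, boundary grid points (exchanged), data exchange and the five-point stencil with coefficients α, β, γ, δ and ε.]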

A parallel computation of (7) may be organised as follows. The grid points are partitioned by vertical panels among the processors as shown in Figure 4. A processor holds at most $\lceil l/p \rceil$ columns of $l$ grid points. To compute the matrix-vector product, each processor exchanges with its neighbours the grid points in the "interfaces" between the processors (the points marked with white squares in Figure 4). Equation (7) is then applied independently by each processor at its local grid points, except at the local interfacing points. After the interfacing grid points from the neighbouring processors have arrived at a processor, Equation (7) is applied using the local interfacing points and those from the neighbouring processors.

This parallel computation offers the possibility of overlapping communication with the computation. If the number of local grid points is large enough, one may expect that while Equation (7) is being applied to those points, the interfacing grid points of the neighbouring processors will have been transferred and be available for use. This method attempts to minimize the overheads incurred by transferring the data (note that we only make gains if the asynchronous transfer of messages is available). The example below is taken from the matrix-vector product routine using MPI

      SUBROUTINE PDMVPDE(NPROCS,MYID,LDC,L,MYL,COEFS,U,V,UEAST,UWEST)
      INCLUDE 'mpif.h'
* Declarations...
* Send border U values to (myid+1)-th processor
      MSGTYPE = 1000
      TO = MYID + 1
      CALL MPI_ISEND(U(EI0),L,MPI_DOUBLE_PRECISION,TO,MSGTYPE,
     +               MPI_COMM_WORLD,SID0,IERR)
* Post to receive border U values from (myid+1)-th processor
      MSGTYPE = 1001
      CALL MPI_IRECV(UEAST,L,MPI_DOUBLE_PRECISION,MPI_ANY_SOURCE,
     +               MSGTYPE,MPI_COMM_WORLD,RID0,IERR)
* Send border U values to (myid-1)-th processor