AdeptVision VME

User’s Guide

Version 11.0

Part Number 00961-00430 Rev. A

July 1994

Adept Technology, Inc.

150 Rose Orchard Way • San Jose, CA 95134 • USA • Phone (408) 432-0888 • Fax (408) 432-8707

Otto-Hahn-Strasse 23 • 44227 Dortmund • Germany • Phone 0231/75 89 40 • Fax 0231/75 89 450

11, Voie la Cardon • 91126 • Palaiseau • France • Phone (1) 69.19.16.16 • Fax (1) 69.32.04.62

1-2, Aza Nakahara, Mitsuya-Cho • Toyohashi-Shi 441-31 • Japan • (0532) 65-2391 • Fax (0532) 65-2390

The information contained herein is the property of Adept Technology, Inc., and shall not be reproduced in whole or in part without prior written approval of Adept Technology, Inc. The information herein is subject to change without notice and should not be construed as a commitment by Adept Technology, Inc. This manual is periodically reviewed and revised.

Adept Technology, Inc., assumes no responsibility for any errors or omissions in this document. Critical evaluation of this manual by the user is welcomed. Your comments assist us in preparation of future documentation. A form is provided at the back of the book for submitting your comments.

Copyright 1993, 1994 by Adept Technology, Inc. All rights reserved.

The Adept logo is a registered trademark of Adept Technology, Inc.

Adept, AdeptOne, AdeptThree, PackOne, HyperDrive, A-Series, S-Series, Adept MC, Adept CC, Adept IC, Adept OC, Adept MV, AdeptVision, VisionWare, AdeptMotion, MotionWare, AdeptForce, AIM, V and V+ are trademarks of Adept Technology, Inc.

Any trademarks from other companies used in this publication are the property of those respective companies.

Printed in the United States of America

Table of Contents

Introduction
    Compatibility
    How to Use This Manual
        Organization
        Before You Begin
        Related Manuals
        Safety
            Reading and Training for Users and Operators
            System Safeguards
    Notes, Cautions, and Warnings
    How Can I Get Help?
        Within the Continental United States
            Service Calls
            Application Questions
            Training Information
        Within Europe
        Outside Continental United States or Europe
        Adept Bulletin Board Service (BBS)

Chapter 1 Overview
    1.1 Introduction
    1.2 What AdeptVision VME Is
        Physical Equipment
            Controller and Vision Processor
            Robot or Motion Device
            Graphics Terminal
            User Equipment
    1.3 What AdeptVision VME Does
    1.4 Vision Basics
        Pixel
        The Camera Imaging Surface
        Resolution
    1.5 Summary of Software Tools
        Boundary Analysis
        Rulers
        Inspection Windows
        Finder Tools
        Processing Windows
        Modeling
    1.6 Overview of Guidance Vision
        Frames
    1.7 Things to Consider When Designing Your Workcell
        Consistent Environment
        Ease of Maintenance
        Safety
        Lighting

Chapter 2 Installation
    2.1 Setting Up the Hardware
        Installing the Controller
        Attaching Cameras and Strobes
            Strobe Compatibility
        Cameras Supported by AdeptVision VME
            Panasonic GP-CD 40
            Panasonic GP-MF 702
            Sony XC-77
        Mounting Cameras
    2.2 Setting Up the Software

Chapter 3 Getting Started
    3.1 V+ Syntax Conventions
    3.2 Virtual Cameras
        What Is a Virtual Camera?
        How Are Camera Numbers Assigned?
        Why Use Virtual Cameras?
    3.3 Camera Calibration
        Camera Calibration Results
    3.4 Motion Devices and Calibration
        Motion Device Calibration
        Start-up Calibration
        Camera Calibration
        The Vision Transformation
            Fixed Mount Camera Transformation
            Robot-Mounted Camera Transformation
    3.5 Loading Vision Calibration Data

Chapter 4 Teaching AdeptVision to See
    4.1 Introduction
        Physical vs. Virtual Cameras
        The Point of Origin
    4.2 VPICTURE—Getting an Image
        VPICTURE Syntax
            VPICTURE Examples
        Executing VPICTURE From the Menu
    4.3 VDISPLAY—Displaying the Image
        VDISPLAY Syntax
            VDISPLAY Examples
        Executing VDISPLAY From the Menu
        Using the Different Display Modes
            Live Modes
            Frame (Frozen) Modes
            Graphics Mode
    4.4 Binary vs. Grayscale Modes
    4.5 Switches and Parameters
    4.6 Using Switches
        Enabling/Disabling Switches
        Viewing Switch Settings
            SWITCH Example
        Image-Acquisition Switches
    4.7 Using Parameters
        Setting Parameters
            Parameter Examples
        Image-Acquisition Parameters
    4.8 Examples of Switch and Parameter Settings

Chapter 5 Boundary Analysis
    5.1 Introduction
    5.2 Switches and Parameters Used During Boundary Analysis
    Boundary Analysis Instructions
        VLOCATE
            VLOCATE Examples
        The DO Monitor Command
        VFEATURE
            What is VFEATURE?
            Blob Allocation
            VFEATURE Example
        VQUEUE

Chapter 6 Vision Tools
    6.1 Defining a Tool Area-of-Interest (AOI)
        Frame Stores
            Virtual Frame Buffers
        Areas-of-Interest
            Defining an Image Buffer Region
    6.2 Linear Rulers
        VRULERI Array
        Linear Ruler Example
    6.3 Arc Rulers
        Arc Ruler Example
    6.4 Ruler Types
        Standard Binary Rulers (type = 0)
        Raw Binary Rulers (type = –1)
        Dynamic Binary Rulers (type = –2)
        Graylevel Rulers (type = 1)
        Fine Edge/Fine Pitch Rulers (type = 2/3)
        Ruler Speed and Accuracy
    6.5 Finder Tools
        VFIND.LINE Array
        Line Finder Tool Polarity
        VFIND.LINE Example
    6.6 Processing Windows (VWINDOW)
        VWINDOW Example
    6.7 Vision Tools: Inspection Windows (VWINDOWI)
    6.8 Vision Tool Data Arrays
    6.9 Windows, Windows, Windows

Chapter 7 Vision Model Processing
    7.1 Introduction
        Why Use Prototype Recognition?
        Why Use Correlation?
        Why Use OCR?
    7.2 Training Prototypes
        Creating Prototypes
        Editing Prototypes
        Preview Window
        Zoom Buttons
        Message Window
        Edit Buttons
        Editing Operation Data Box
Table of Contents

Edge/Region Data Boxes . .

Edge/Region Radio Buttons

Prototype Training Hints . .

Sub-Prototypes . . . . . . . .

Prototype Parameters . . . .

Setting Prototype Parameters

Verify Percent . . . . .

Effort Level . . . . . . .

Min/Max Area . . . . .

Limit Position . . . . . .

Edge Weights . . . . . .

Assign Cameras . . . .

7.3

7.4

7.5

.

.

.

.

.

.

.

.

.

.

.

.

93

93

93

94

94

94

94

94

94

95

95

95

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

95

Recognizing a Prototype . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Prototype-Relative Inspection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Prototype-Relative Part Acquisition . . . . . . . . . . . . . . . . . . . . . . . . . . . .

95

96

96

Performing Correlation Matches

. . . . . . . . . . . . . . . . . . . . . . . . . . .

97

Creating a Correlation Template . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Matching a Correlation Template . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

97

97

Performing Optical Character Recognition

98

Using Prototypes

Training an OCR Font . .

Font Planning . . . . . . .

Character Recognition . .

OCR Examples . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

. . . . . . . . . . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

. .

. .

.

.

98

99

100

101

102

. . . . . . . . . . . . . . . . . . . . . . . .

104

.

.

.

.

.

.

.

.

.

.

104

105

105

105

106

. . . . . . . . . . . . . . . . . . . . . . . . . .

107

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

108

Prototype Model Switches and Parameters

7.7

Loading and Storing Vision Models

. . .

. . .

. .

. . .

. . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Programming AdeptVision VME

8.1

Introduction

8.2

Application Development Strategy

8.3

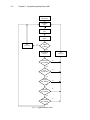

Inspection Vision Example Program

8.4

Developing the Program Code

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

. . . . . . . . . . . . . . . . . . . . . . . . .

108

. . . . . . . . . . . . . . . . . . . . . . . .

109

. . . . . . . . . . . . . . . . . . . . . . . . . . . .

111

Program Header and Variables Declarations

Set the Camera Environment . . . . . .

Acquire an Image and Start Processing

Locate the Object and Begin Inspections

Output the Results . . . . . . . . . . . .

Further Programming Considerations . . . .

8.5

.

.

.

.

.

.

.

.

.

.

.

.

. . . . . . . . . . . . . . . . . . . .

7.6

VSTORE . . . . . . . . .

VLOAD . . . . . . . . . .

Displaying Vision Models

Deleting Vision Models .

Renaming Vision Models

8

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

The Complete Inspection Vision Program

.

.

.

.

.

.

111

112

113

113

119

121

. . . . . . . . . . . . . . . . . . . .

122

. . . .

. . . .

. . . .

. . .

. . . .

. . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

vii

AdeptVision VME User’s Guide

The Main Program - inspect.part

Subroutine - line.line . . . . . . .

Subroutine - init.program . . . .

Subroutine - write.vwin . . . . .

9

.

.

.

.

122

128

130

131

Guidance Vision

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

133

9.1

Introduction

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

134

9.2

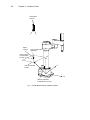

Using a Fixed-Mount Camera

9.3

4-Axis SCARA Robot with Camera Mounted on Link #2

. . . . . . . . . . .

138

9.4

5-Axis SCARA Robot with Camera Mounted on Link #2

. . . . . . . . . . .

143

9.5

Guidance Vision Program

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

145

The Sample Program . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

146

Further Programming Considerations

. . . . . . . . . . . . . . . . . . . . . . .

155

Error Handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Generalizing the Program . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

155

155

9.6

10

Advanced Operations

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

. . . . . . . . . . . . . . . . . . . . . . . .

134

157

158

.

.

.

.

.

.

158

159

159

160

160

162

. . . . . . . . . . . . . . . . . . . . . .

162

Blob-Relative Inspection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Prototype-Relative Inspection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

162

164

10.2 Performing Frame-Relative Inspections

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Setting Vision Switches .

Viewing Switch Settings

Setting Vision Parameters

Viewing Parameters . . .

List of Switches . . . . .

List of Parameters . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

165

. . . . . . . . . . . . . . . . . . . .

166

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

167

10.4 Using a Vision-Guided Tracking Conveyor

Switches and Parameters

.

.

.

.

.

.

. . . . . . . . . . . . . . . .

10.3 Frame-Relative Inspections Using VDEF.TRANS

viii

.

.

.

.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

What is “High Speed?” . . . . . . . . . . . . . .

Using the Two Frame Store Areas . . . . . . . .

Using VPICTURE With Different Frame Stores

Using VDISPLAY With Different Frame Stores

Sample Code for a High-Speed Inspection . . .

The High-Speed Trigger . . . . . . . . . . . . . .

B

.

.

.

.

. . . . . . . . . . . . . . . . . . . . . . . . . . . .

10.1 Performing High-Speed Inspections

A

.

.

.

.

.

.

.

.

.

.

167

167

167

167

168

171

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

175

Viewing VFEATURE( ) Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Establishing VFEATURE( ) Values . . . . . . . . . . . . . . . . . . . . . . . . . . .

175

175

VFEATURE( ) Values

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Appendix C Lens Selection
    Formula for Focal Length
    Formula for Resolution

Appendix D Lighting Considerations
    D.1 Types of Lighting
    D.2 Lighting Strategies
        Diffuse
        Back
        Directional
        Structured
        Strobe
    D.3 Filtering and Special Effects
        Polarizing Filters
        Color Filters

Appendix E Vision Window Menu

Appendix F The DEVICE Instruction With Vision
    F.1 Using DEVICE With Vision
    Examples

Appendix G Third-Party Suppliers
    G.1 Third-Party Suppliers (U.S.)
    G.2 Third-Party Suppliers (Europe)
    G.3 Third-Party Suppliers (Asia-Pacific)

Index

List of Figures

Figures are numbered by chapter: 1-1 to 1-5, 2-1, 3-1 and 3-2, 4-1 to 4-13, 6-1 to 6-12, 7-1 and 7-2, 8-1 to 8-5, 9-1 to 9-8, 10-1 and 10-2, and C-1 and C-2.

Typical AdeptVision VME System
Sample Object
A Grayscale Image
A Binary Image
Resolution Factors
Initial Screen
Sample Operation
Physical/Virtual Camera Relationship
VPICTURE Options
Display Mode Options
Sample Vision Matrix
Binary Representation of Sample Matrix
Figure 4-5  Grayscale Representation of Sample Matrix
Figure 4-6  Sample Object
Impact and Trapping Hazards
Switch and Parameter Example 1
Switch and Parameter Example 2
Switch and Parameter Example 3
Switch and Parameter Example 4
Switch and Parameter Example 5
Switch and Parameter Example 6
Switch and Parameter Example 7
Figure 6-1  Rectangular Area of Interest Shapes
Figure 6-2  Arc Shaped Area of Interest Shapes
Figure 6-3  Sample Area-of-Interest
Sample Image Buffer Regions
Linear Ruler Example
Sample Gauge Face
Arc Ruler Example
Ruler Types
Line Finder Search Area
Finder Tool Polarity
Executing the VWINDOW Instruction
Executing a VFIND.LINE Instruction
Executing a VFIND.ARC Instruction
Calculating the Object Tail Location
Fixed-Mount Camera (Vision Location)
Figure 9-2  Fixed-Mount Camera Vision Transformation
Calculating the “Link2” Transformation
Components of the Vision Location
Final Part Acquire Location
Five-Axis Vision Transformation
Example Program Setup
Ping-Pong Frame Grabbing
Blob Relative Inspection
Camera Imaging
Camera Scale Factor
VWINDOW Example
Prototype Editing Operations
Font Similarity Matrix
Application Flow Chart
Line Finder Example
Link2 Coordinate Frame

List of Tables

Table 4-1   Image-Acquisition Switches
Table 4-2   Image-Acquisition Parameters
Table 5-1   Boundary Analysis Switches
Table 5-2   Boundary Analysis Parameter
Table 5-3   VFEATURE Values and Interpretation
Table 7-1   Prototype Model Switches
Table 7-2   Prototype Model Parameters
Table A-1   Vision Switches
Table A-2   Vision Parameters
Table B-1   VFEATURE( ) Values and Interpretation
Table C-1   Camera Scale Factors
Table D-1   Types of Lighting
Table F-1   DEVICE Input/Output Format
Table F-2   Vision Memory Allocation
Table G-1   Fiber Optic Lighting Suppliers
Table G-2   Lighting Suppliers
Table G-3   Camera Equipment Suppliers
Table G-4   Frame Splitter Suppliers
Table G-5   Camera Suppliers
Table G-6   Filter and Optics Suppliers
Table G-7   Lens Suppliers
Table G-8   Mounting Hardware Suppliers
Table G-9   Lighting Suppliers
Table G-10  Lens Suppliers
Table G-11  Filter and Optics Suppliers
Table G-12  Lighting, Filter, and Optics Suppliers

Introduction

  Compatibility
  How to Use This Manual
    Organization
    Before You Begin
    Related Manuals
  Safety
    Reading and Training for Users and Operators
    System Safeguards
  Notes, Cautions, and Warnings
  How Can I Get Help?
    Within the Continental United States
      Service Calls
      Application Questions
      Training Information
    Within Europe
    Outside Continental United States or Europe
    Adept Bulletin Board Service (BBS)

Compatibility

This manual is for use with V+ systems equipped with the AdeptVision software and hardware options. The system version must be 11.0 or later.

This manual is intended primarily for vision application programmers. If your system includes the optional VisionWare or MotionWare with vision software, you do not need to read this manual. However, many principles of machine vision and AdeptVision VME processing are covered in greater detail here than in the VisionWare or MotionWare user’s guides, so a general review of this manual may be useful.

How to Use This Manual

Organization

Material in this manual is presented in a step-by-step fashion. Each chapter expands on and relies on information in the preceding chapters. If you are new to machine vision systems, this manual will take you from the conceptual basis for machine vision to advanced programming techniques in computer vision applications. Here is what you will find in each of the chapters:

Chapter 1

presents an overview of machine vision principles and introduces vocabulary and concepts you will need when reading the other chapters.

Chapter 2

shows you how to physically set up the AdeptVision VME system hardware.

Chapter 3

shows you how to perform all the initialization tasks necessary to get your system ready to start developing vision applications.

Chapter 4

introduces vision processing. It describes how to acquire and process an image. You will learn to fine-tune the images you produce so your vision applications run as efficiently and predictably as possible.

Chapter 5

describes the first vision processing strategy, boundary analysis. You will learn