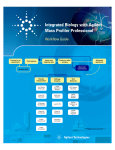

Transcript